Optimize Memory Fragmentation due to Huge Nginx Configuration

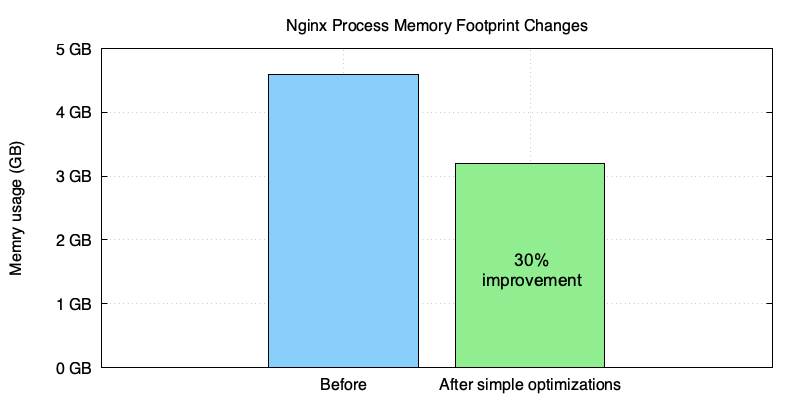

We recently used OpenResty XRay to help an enterprise customer selling CDN services optimize their OpenResty/Nginx servers’ memory usage. This customer has many virtual servers and URI locations defined in their OpenResty/Nginx configuration files. OpenResty XRay did most of the analyses automatically in the customer’s production environment. The results led to solutions that reduced the memory footprint of the nginx processes by about 30%.

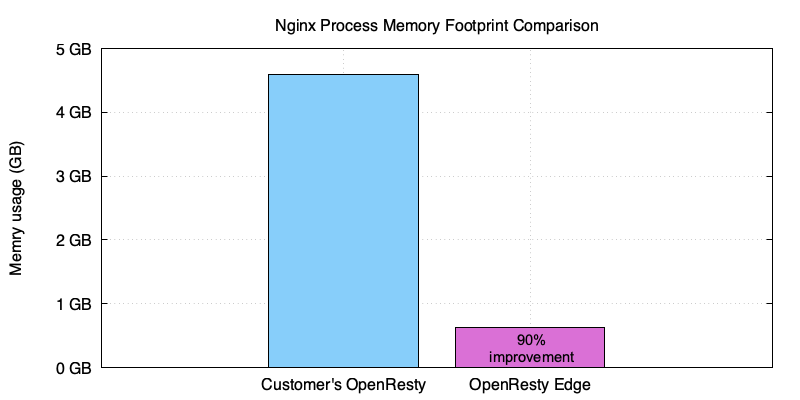

Further optimizations could reduce the footprint by about 90%, as evidenced by a comparison with the nginx worker processes of our OpenResty Edge product.

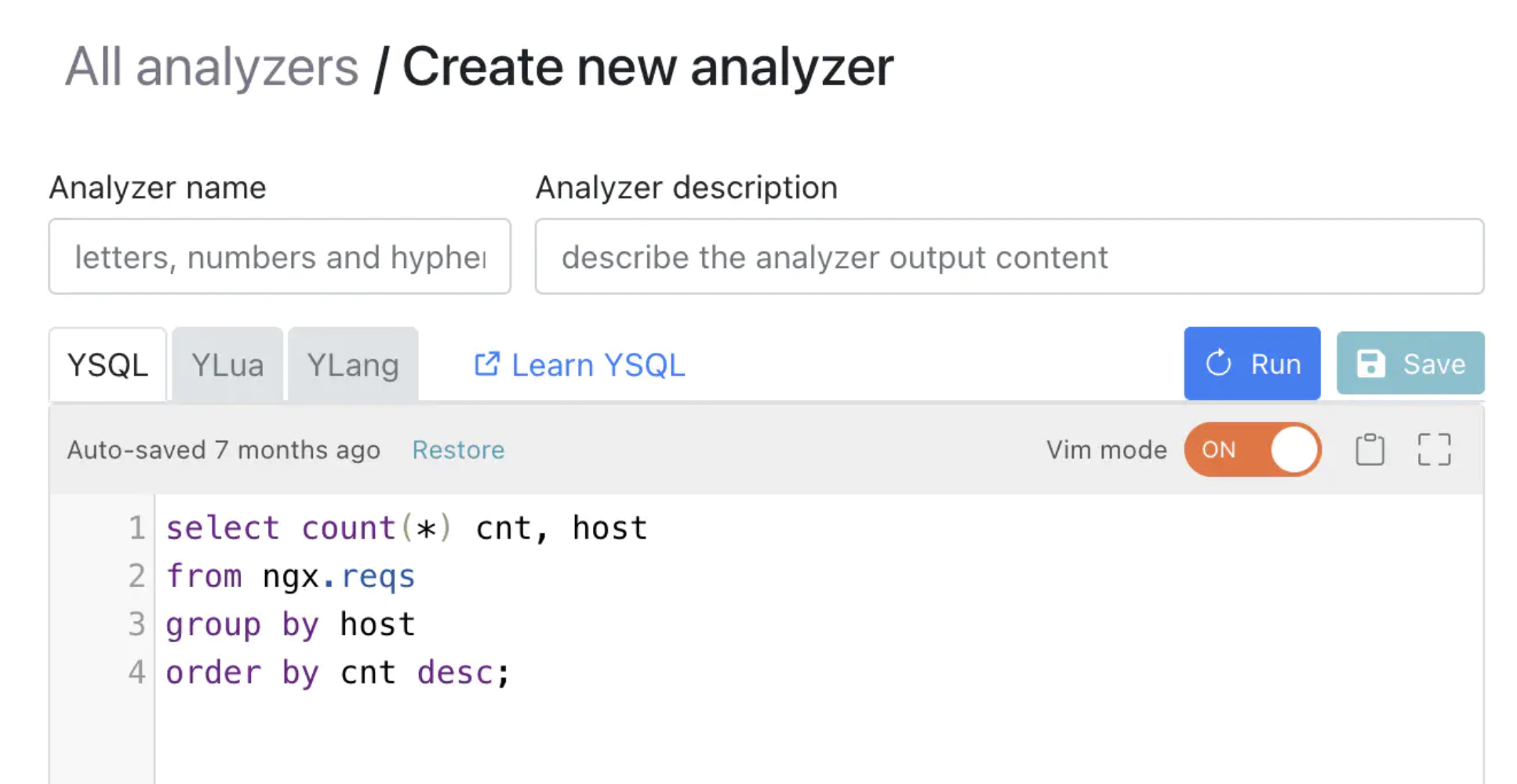

OpenResty XRay is a dynamic-tracing product that automatically analyzes your running applications to troubleshoot performance problems, behavioral issues, and security vulnerabilities with actionable suggestions. Under the hood, OpenResty XRay is powered by our Y language targeting various runtimes like Stap+, eBPF+, GDB, and ODB, depending on the contexts.

The Challenge

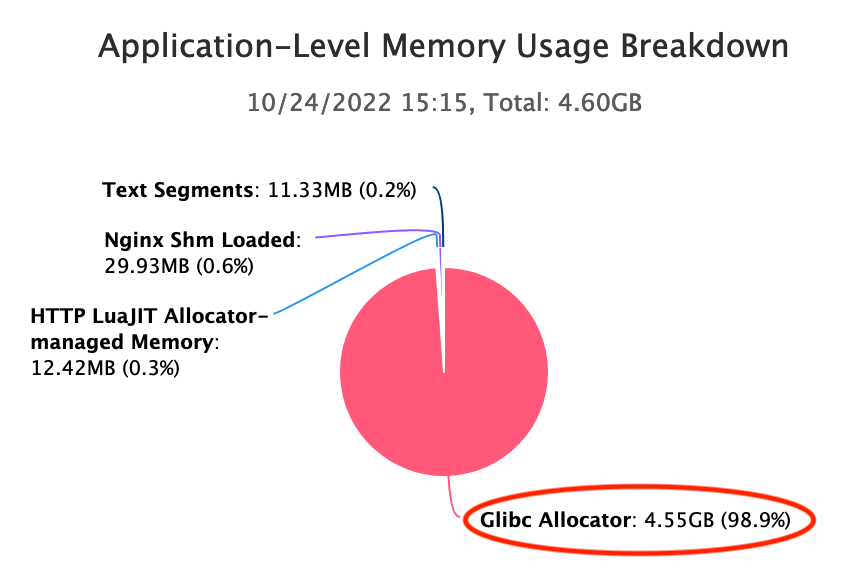

This CDN vendor uses a huge nginx.conf configuration file to serve almost 10K virtual hosts in their OpenResty servers. Each nginx master instance takes gigabytes of memory right after startup, and its footprint nearly doubles after one or several HUP reloads. It maxes out at about 4.60GB, as evidenced by the following chart generated by OpenResty XRay.

We can see from the OpenResty XRay’s application-level memory usage breakdown chart that the Glibc allocator takes the majority of the resident memory, 4.55GB:

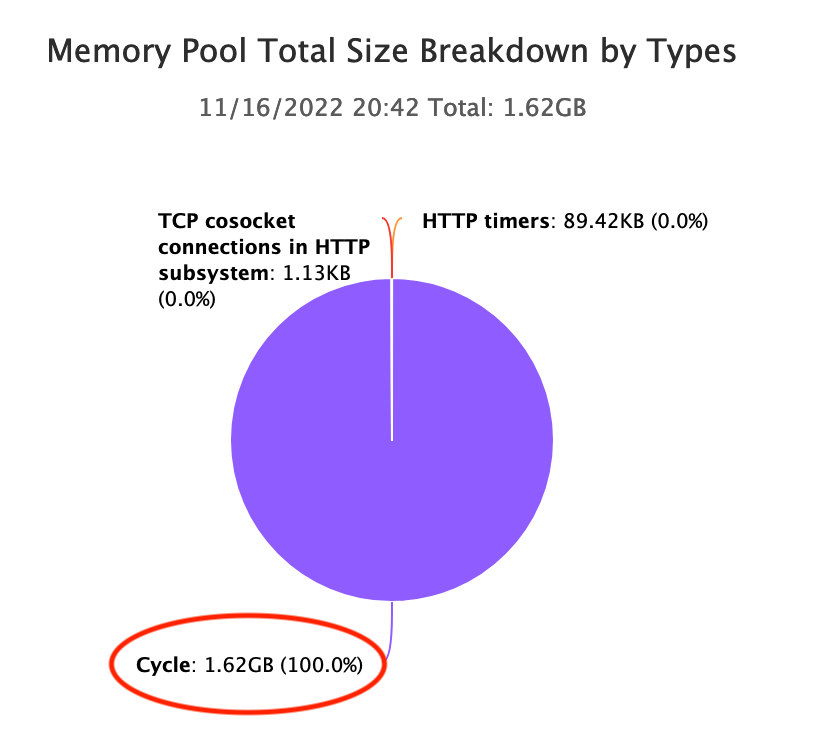

And OpenResty XRay finds that the Nginx cycle memory pool takes a lot of memory:

When Nginx loads the configuration files, we know it allocates data structures for the configuration data inside this cycle pool. It is huge, 1.62GB, but way less than the 4.60GB quantity mentioned above.

RAM is still an expensive and scarce hardware resource, especially on public clouds like AWS and GCP. The customer wants to save costs by downgrading to smaller machines with less memory.

Analyses

OpenResty XRay performed deep analyses of the customer’s online processes. It did not require any cooperation from the customer’s applications:

- No extra plugins, modules, or libraries.

- No code injections or patches.

- No special compilation or startup options.

- Not even any need to restart the application processes.

The analyses were done entirely in a postmortem manner, thanks to the dynamic-tracing technology employed by OpenResty XRay.

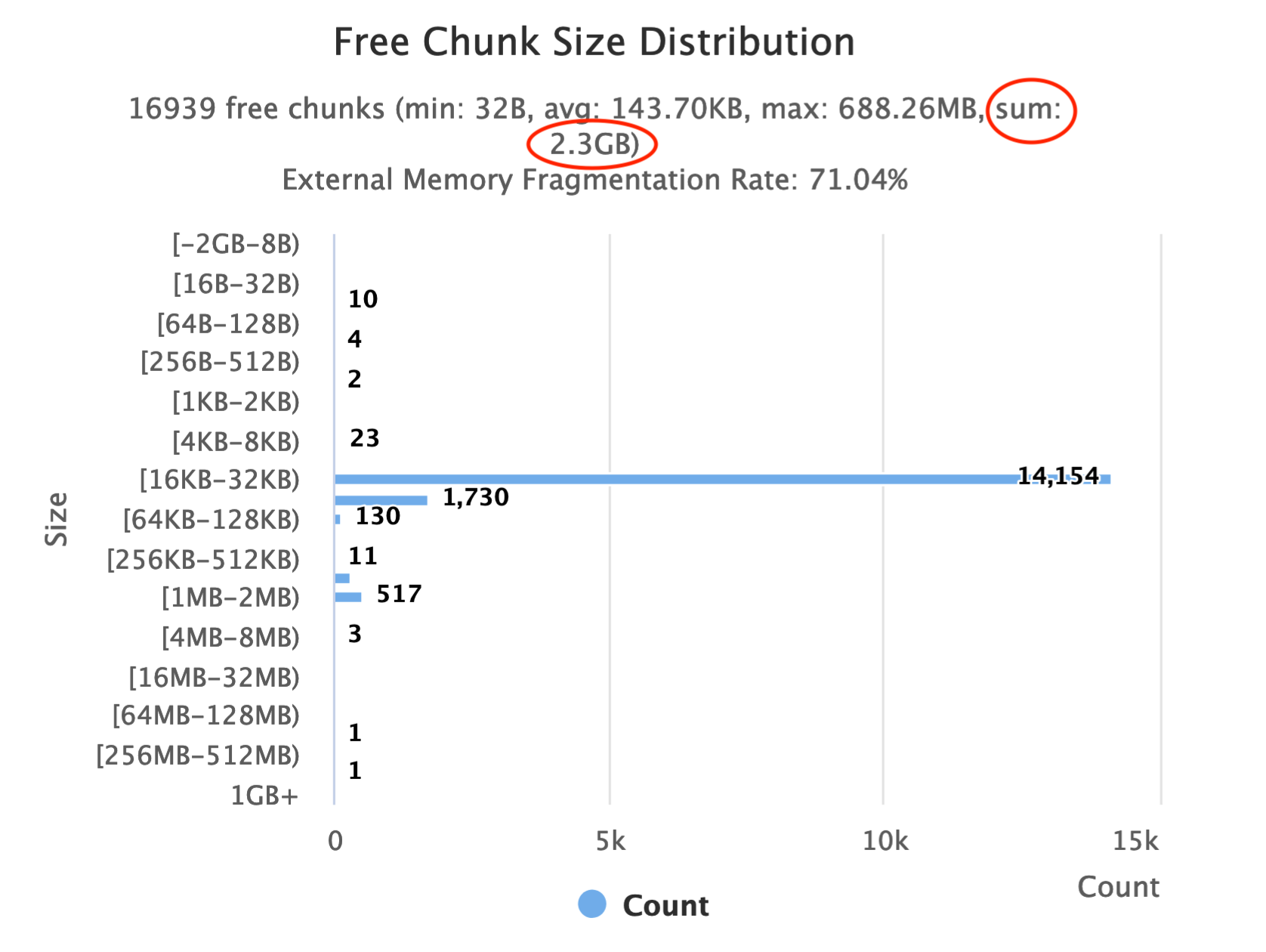

Too Many Free Chunks

OpenResty XRay automatically sampled the online nginx processes with a Glibc memory allocator analyzer. The analyzer generated the following bar chart showing how the sizes of the free chunks managed by the allocator are distributed.

The Glibc allocator does not usually return free chunks immediately to the operating system (OS). It may preserve some free chunks to speed up future allocations. But such intentional preservation is generally tiny; it cannot amount to gigabytes. Here we see the sum of the free chunk sizes is 2.3GB already. A more likely cause is memory fragmentation.

See Memory Fragmentation in the Normal Heap

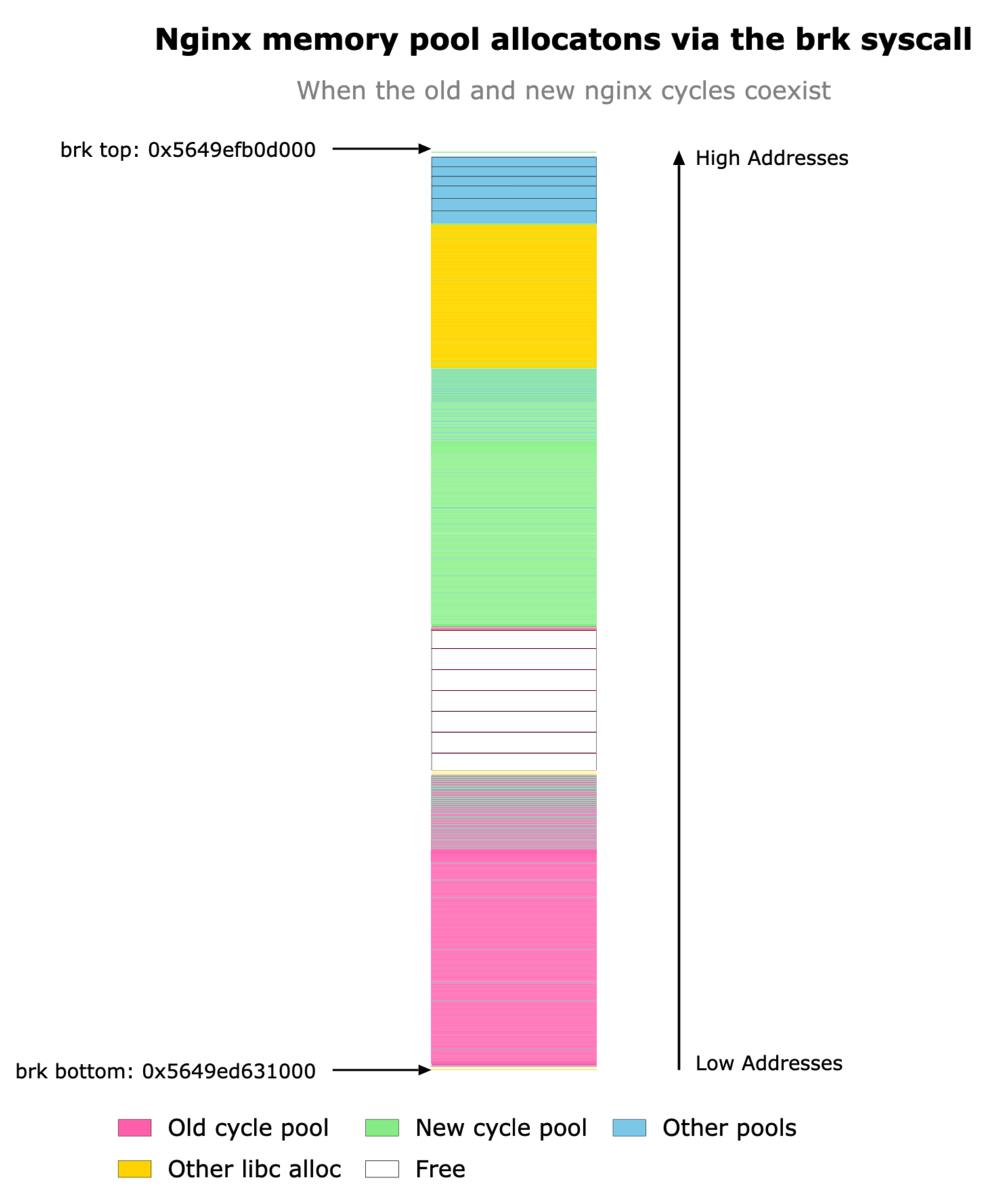

Most small memory allocations happen in the “normal heap” through the brk Linux system call. This heap is like a linear “stack” which can only grow or shrink by moving its “top” pointer. Free chunks in the middle of the heap cannot be released to the OS; they are returned only once all the chunks above them become free too.
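The rule described above can be sketched as a toy model. This is only an illustration, not Glibc's actual implementation: the `Chunk` class and the `releasable_from_top` helper are hypothetical names, and the chunk sizes are made up.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    size: int      # bytes
    in_use: bool   # True while the application still holds the chunk


def releasable_from_top(heap):
    """Bytes a brk-style heap could give back to the OS.

    Only the run of free chunks touching the top (the end of the list)
    can be trimmed; free chunks below an in-use chunk stay stuck.
    """
    total = 0
    for chunk in reversed(heap):   # walk down from the brk top
        if chunk.in_use:
            break
        total += chunk.size
    return total


# Lowest address first; the last element sits right below the brk top.
heap = [Chunk(4096, False), Chunk(4096, False), Chunk(4096, True)]

print(releasable_from_top(heap))   # 0: the in-use top chunk pins everything
heap[-1].in_use = False            # free the chunk at the top
print(releasable_from_top(heap))   # 12288: now the whole heap can shrink
```

In this model, 8192 free bytes cannot be returned at all until the single chunk at the top is freed.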

OpenResty XRay’s memory analyzers can help us see the state of such heaps. Look at the following heap diagram sampled right after the nginx master process loads the new configuration in response to a HUP signal.

We can see that the heap grows upwards, i.e., towards high memory addresses. Note the brk top pointer, the only thing that can move. The green boxes belong to the new “cycle pool” of Nginx, while the pink ones belong to the old “cycle pool.” One interesting thing is that Nginx keeps the old cycle pool, and thus the old configuration data, until the new one is loaded successfully. This behavior is a protection mechanism that lets Nginx gracefully fall back to the old configuration when the new one fails to load. Unfortunately, as we can see above, the old configuration data’s boxes (in pink) are below the new data (in green), and thus they can only be returned to the OS when the new data is freed as well.

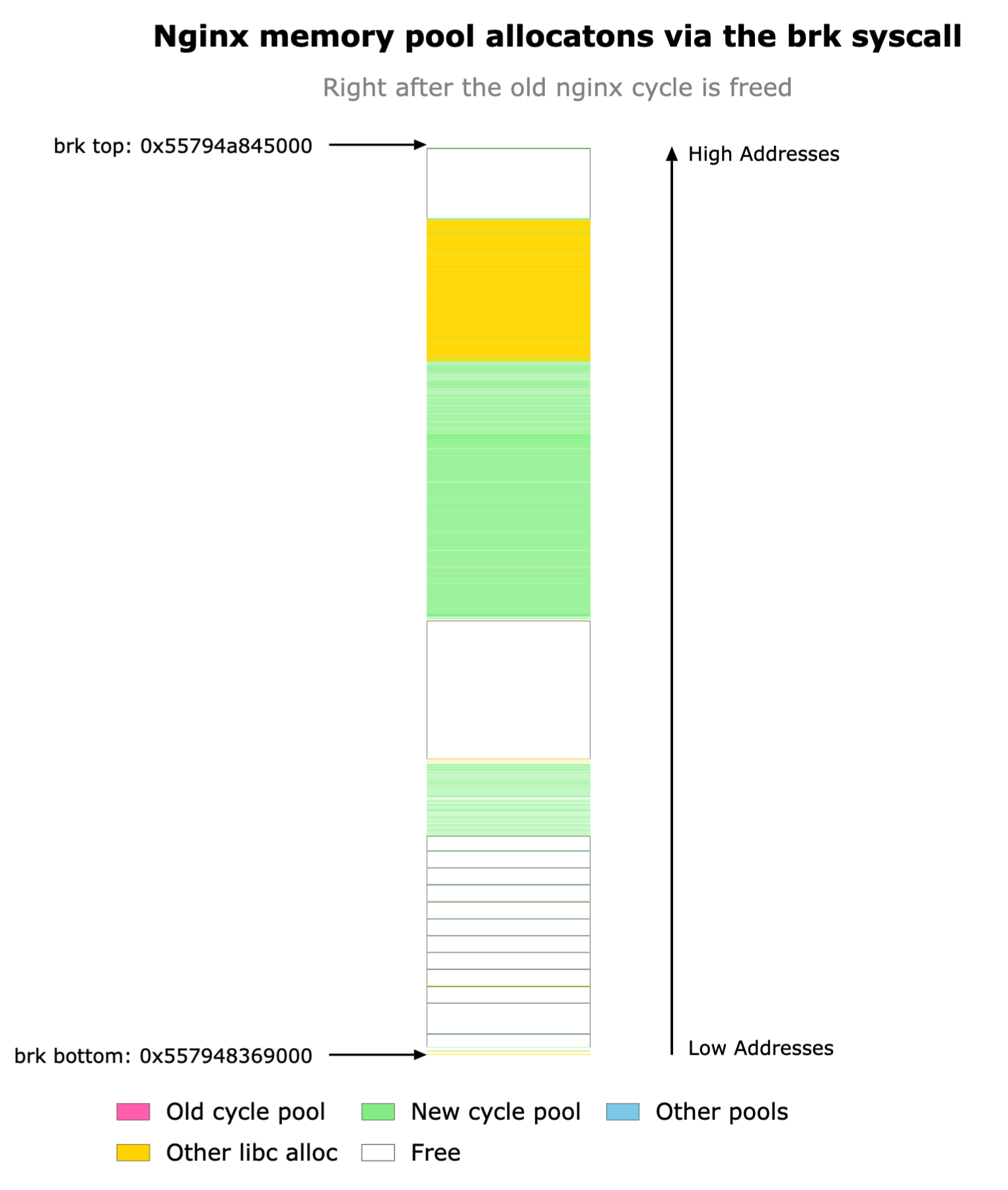

Indeed, after Nginx frees the old configuration data and the old cycle pool, their original places become free chunks that get stuck below the new cycle pool’s chunks:

It is a textbook example of memory fragmentation. Normal heaps can only release memory at the top; thus, they are more vulnerable to memory fragmentation than other memory allocation mechanisms, like the mmap* system calls. But will mmap* save us here? Not necessarily.

The mmap World

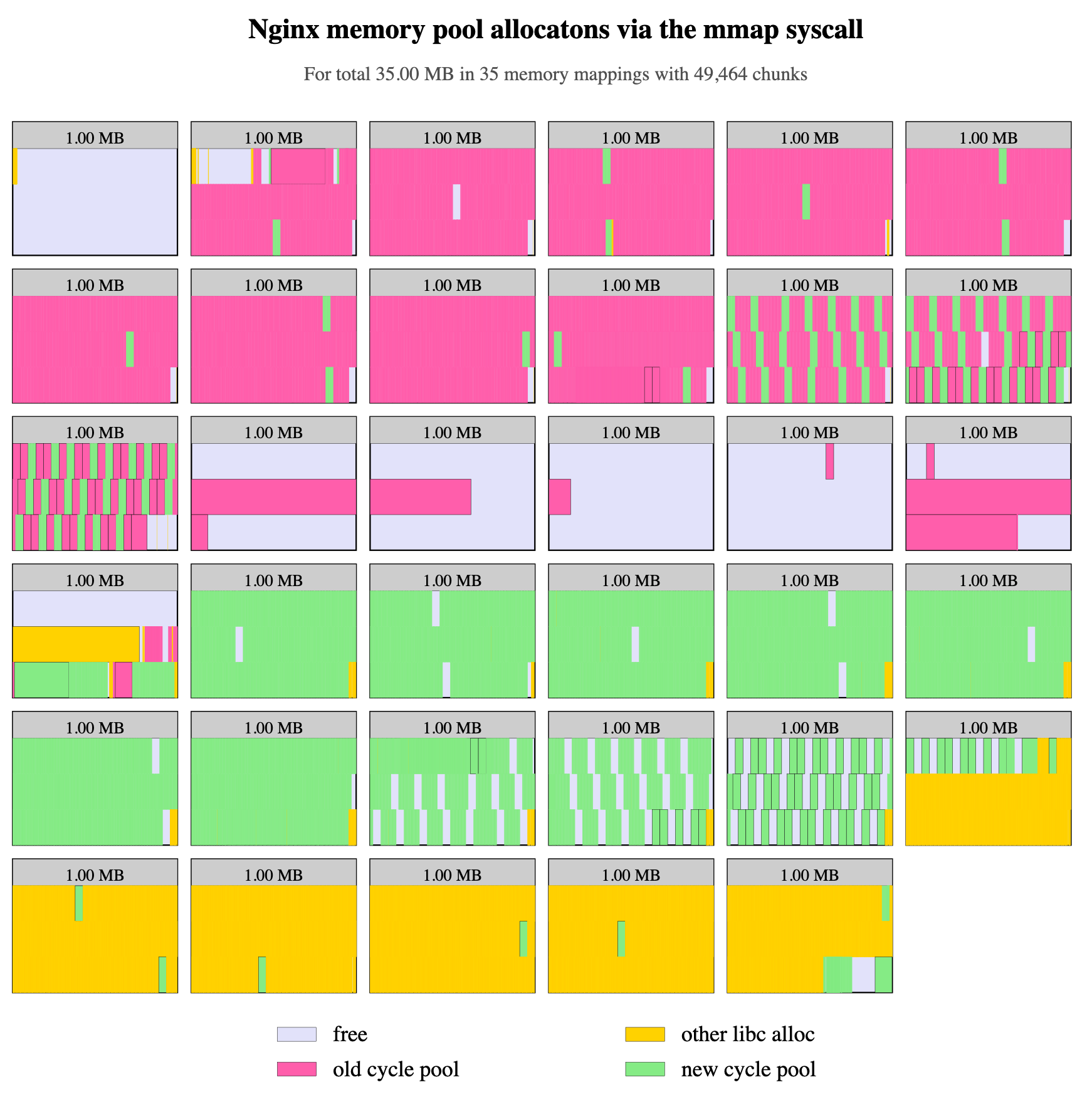

The Glibc allocator can also allocate memory through the mmap* system calls. These system calls allocate discrete memory blocks or segments of memory that may reside at almost any address in the process’s address space and span any number of memory pages.

It sounds like a great way to mitigate the memory fragmentation issue above. But when we intentionally block the normal heap’s way to grow, memory fragmentation of a similar degree still happens, according to the diagram generated by OpenResty XRay’s analyzers.

So Glibc tends to allocate relatively large memory segments, 1MB here, to fulfill smaller memory chunk allocation requests from the application (Nginx here). And therefore, memory fragmentation still happens inside these mmap’d segments of 1MB. If a small chunk is still in use in a segment, then the whole segment won’t get released to the OS.

In the diagram above, we can see the old cycle pool chunks (in pink) and the new pool chunks (in green) still interleave in many mmap’d segments.
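To make the effect concrete, here is a small simulation of chunks from two pools sharing 1MB segments. It is a simplified sketch, not Glibc's algorithm: `pack` and `stuck_free_bytes` are hypothetical helpers, the 256KB chunk size is invented, and the strictly alternating arrival order is an assumption chosen to show interleaving.

```python
SEGMENT_SIZE = 1024 * 1024  # 1MB per mmap'd segment, as observed above


def pack(requests, segment_size=SEGMENT_SIZE):
    """Pack (owner, size) requests into fixed-size segments in arrival order."""
    segments, current, used = [], [], 0
    for owner, size in requests:
        if current and used + size > segment_size:
            segments.append(current)      # segment is full, open a new one
            current, used = [], 0
        current.append((owner, size))
        used += size
    if current:
        segments.append(current)
    return segments


def stuck_free_bytes(segments, freed_owner):
    """Bytes freed by freed_owner that sit in segments another owner still pins."""
    stuck = 0
    for seg in segments:
        if any(owner != freed_owner for owner, _ in seg):
            stuck += sum(size for owner, size in seg if owner == freed_owner)
    return stuck


# Assumption: old and new cycle pool chunks arrive interleaved.
requests = [("old", 256 * 1024), ("new", 256 * 1024)] * 4
segments = pack(requests)

print(len(segments))                      # 2
print(stuck_free_bytes(segments, "old"))  # 1048576
```

Both segments contain chunks of the new pool, so freeing the old pool releases nothing to the OS in this model even though 1MB of it is free.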

Solutions

We proposed several solutions for our customer.

The Easy Way

The easiest way is to tackle the memory fragmentation problem head-on. According to the analyses we did with OpenResty XRay above, we should make one or more of the following changes:

- Avoid allocating cycle pool memory in the “normal heap” (i.e., eliminating the brk system calls for such allocations).

- Ask Glibc to use appropriate mmap’d segment memory sizes (not too big!) to fulfill memory allocation requests in the cycle pool.

- Cleanly separate the memory chunks of different cycle pools into different mmap’d segments.
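The idea behind the last item can be sketched with a toy allocator that never lets two pools share a segment. This is a hypothetical illustration of the principle only; it is not the set of instructions given to the customer, the `PoolSegments` class is invented for this example, and it assumes every request fits inside one segment.

```python
class PoolSegments:
    """Toy allocator that gives each pool its own private segments."""

    def __init__(self, segment_size=1024 * 1024):
        self.segment_size = segment_size
        self.segments = {}   # pool name -> list of bytes used per segment

    def alloc(self, pool, size):
        # Assumes size <= segment_size.
        segs = self.segments.setdefault(pool, [])
        if not segs or segs[-1] + size > self.segment_size:
            segs.append(0)   # open a fresh segment owned only by this pool
        segs[-1] += size

    def destroy(self, pool):
        """Free a whole pool; returns how many segments can be unmapped."""
        return len(self.segments.pop(pool, []))

    def mapped_segments(self):
        return sum(len(segs) for segs in self.segments.values())


allocator = PoolSegments()
for _ in range(4):                       # interleaved requests from two pools
    allocator.alloc("old", 256 * 1024)
    allocator.alloc("new", 256 * 1024)

print(allocator.mapped_segments())       # 2
print(allocator.destroy("old"))          # 1
print(allocator.mapped_segments())       # 1
```

Because no segment mixes chunks from both pools, destroying the old pool frees whole segments, even when the requests arrive interleaved.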

We give detailed optimization instructions to paying customers of OpenResty XRay, so no coding is needed at all.

The Better Way

And yeah, there’s a much better way. The open-source OpenResty software does provide Lua APIs and Nginx configuration directives to dynamically load (and unload) new configuration data in Lua land without going through the Nginx configuration file mechanism. This makes it possible to use a small, constant amount of memory to handle the configuration data of many more virtual servers and locations. Also, the Nginx server startup and reload time is much shorter (from many seconds to almost zero). In fact, with dynamic configuration loading, the HUP reload operation itself becomes very rare. One downside of this approach is that it requires some extra Lua coding on the customer’s side.
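The memory argument can be illustrated with a bounded cache that loads each host's configuration on first use and evicts the least recently used entry. This is a language-neutral sketch written in Python, not OpenResty's Lua API; `HostConfigCache`, the loader function, and the host names are all hypothetical.

```python
from collections import OrderedDict


class HostConfigCache:
    """Keeps at most `capacity` host configs in memory (LRU eviction)."""

    def __init__(self, loader, capacity):
        self.loader = loader          # fetches one host's config on demand
        self.capacity = capacity
        self.entries = OrderedDict()  # host -> config, oldest first
        self.misses = 0

    def get(self, host):
        if host in self.entries:
            self.entries.move_to_end(host)    # mark as recently used
            return self.entries[host]
        self.misses += 1
        config = self.loader(host)
        self.entries[host] = config
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        return config


# Hypothetical loader; a real one would query a database or config service.
cache = HostConfigCache(
    lambda host: {"host": host, "upstream": "backend-for-" + host},
    capacity=100,
)

for i in range(10_000):
    cache.get("site%d.example.com" % i)
cache.get("site9999.example.com")         # still cached, no extra load

print(len(cache.entries), cache.misses)   # 100 10000
```

However many hosts are served, the number of resident entries never exceeds the chosen capacity, which is the property that keeps memory use roughly constant.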

Our OpenResty Edge software product implements such dynamic configuration loading and unloading in the best way conceived by the OpenResty author. It does not require any coding by the users. So it is an easy option too.

Results

The customer decided to try the easy way first, resulting in a 30% reduction in the total memory footprint after several HUP reloads.

There is still some remaining fragmentation that deserves further attention. But our customer is already happy enough. Besides, the better way mentioned above can save about 90% (like in our OpenResty Edge product):

About The Author

Yichun Zhang (GitHub handle: agentzh) is the original creator of the OpenResty® open-source project and the CEO of OpenResty Inc.

Yichun is one of the earliest advocates and leaders of “open-source technology”. He has worked at many internationally renowned tech companies, such as Cloudflare and Yahoo!. He is a pioneer of “edge computing”, “dynamic tracing” and “machine coding”, with over 22 years of programming and 16 years of open source experience. Yichun is well-known in the open-source space as the project leader of OpenResty®, adopted by more than 40 million global website domains.

OpenResty Inc., the enterprise software start-up founded by Yichun in 2017, has customers from some of the biggest companies in the world. Its flagship product, OpenResty XRay, is a non-invasive profiling and troubleshooting tool that significantly enhances and utilizes dynamic tracing technology. And its OpenResty Edge product is a powerful distributed traffic management and private CDN software product.

As an avid open-source contributor, Yichun has contributed more than a million lines of code to numerous open-source projects, including Linux kernel, Nginx, LuaJIT, GDB, SystemTap, LLVM, Perl, etc. He has also authored more than 60 open-source software libraries.