Enable HTTP Cache in OpenResty Edge

Today I’ll demonstrate how to enable HTTP response caching in OpenResty Edge.

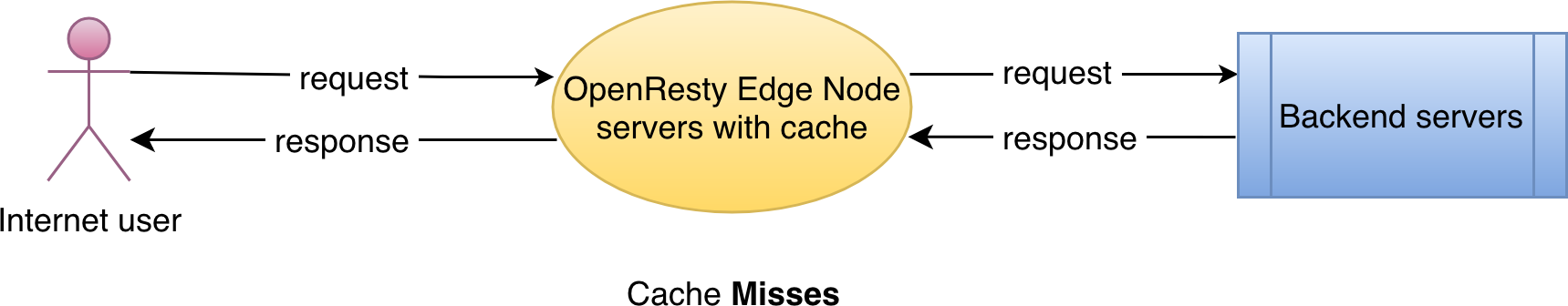

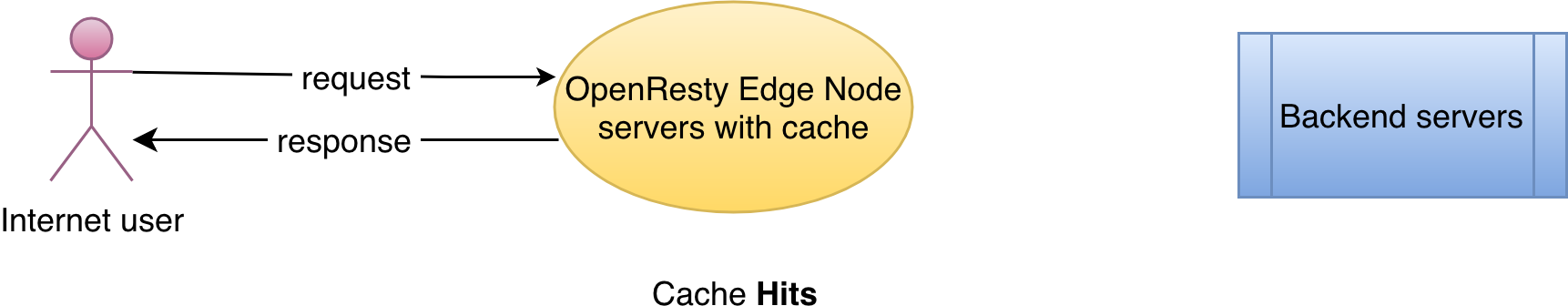

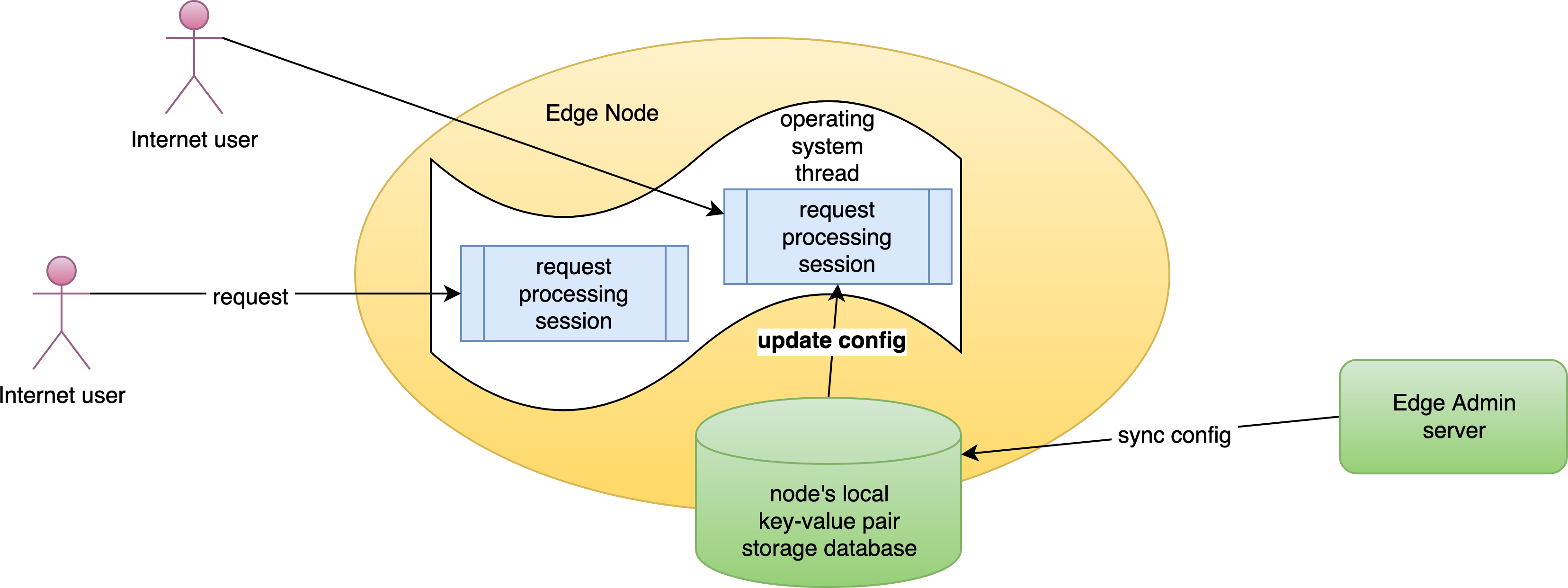

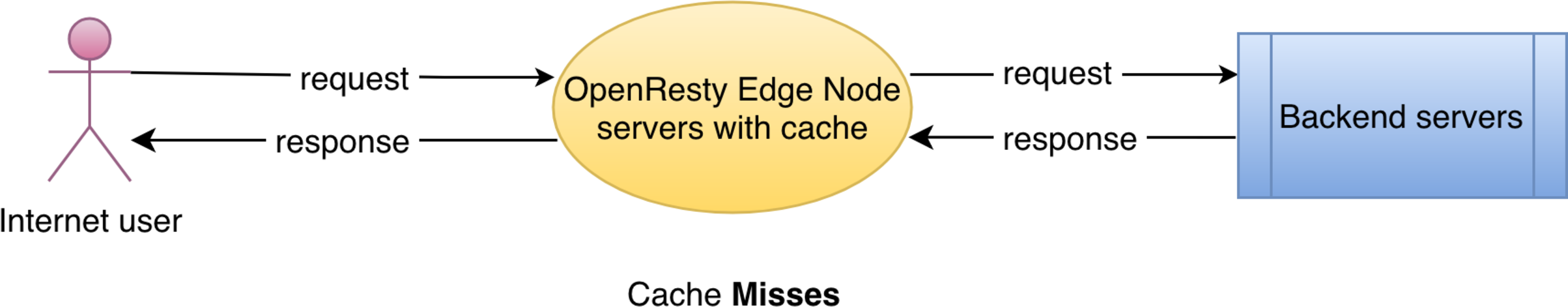

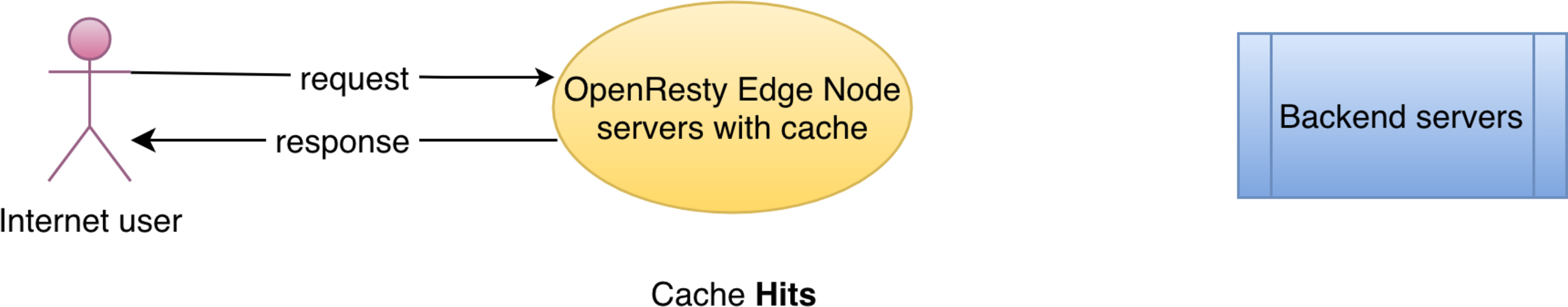

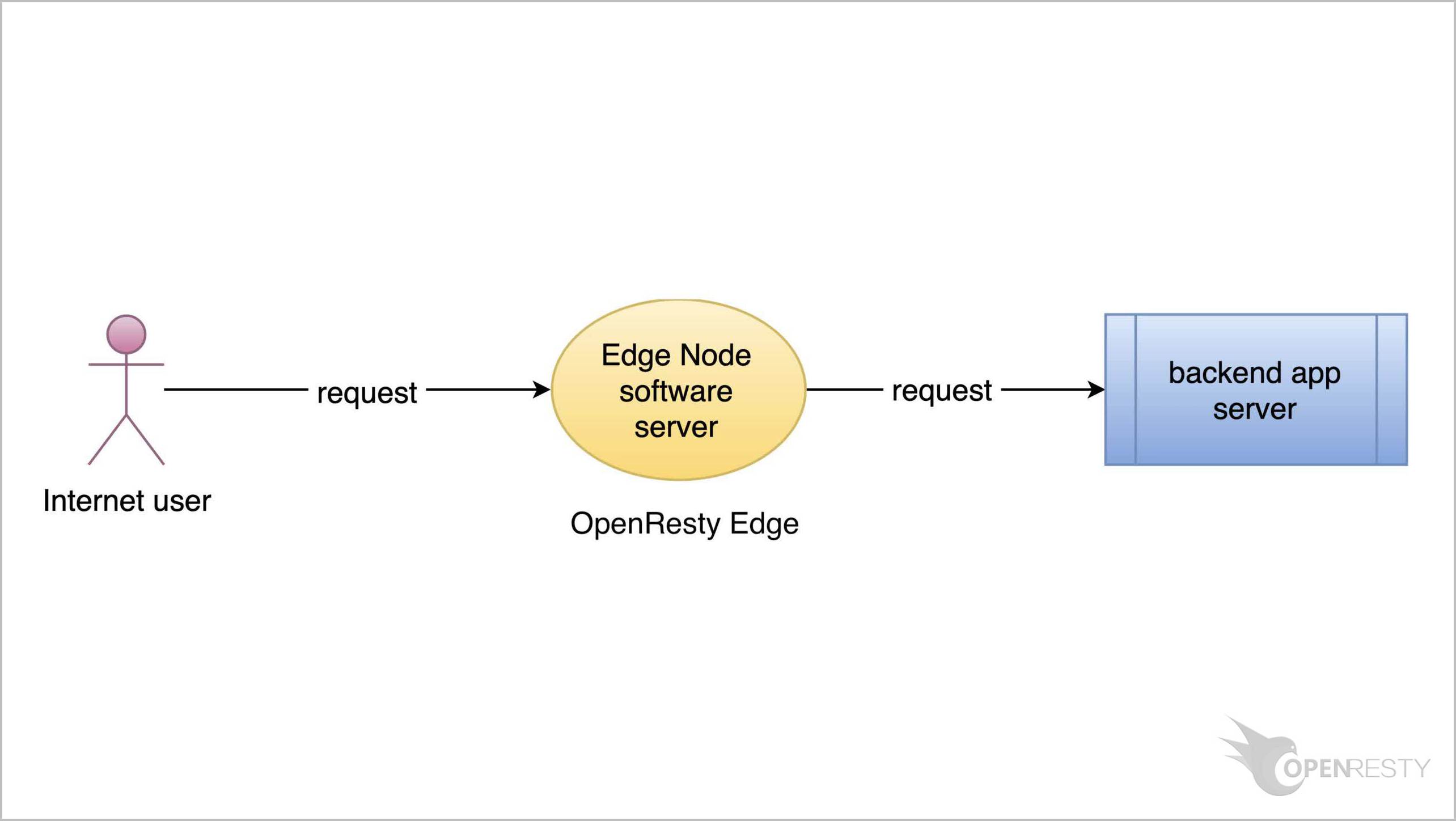

When a client request hits the cache on an Edge Node server, no request needs to be sent to the backend server. This reduces response latency and saves network bandwidth.

Enable response caching for the sample application

Let’s go to the OpenResty Edge Admin web console. This is our sample deployment of the console. Every user has their own deployment.

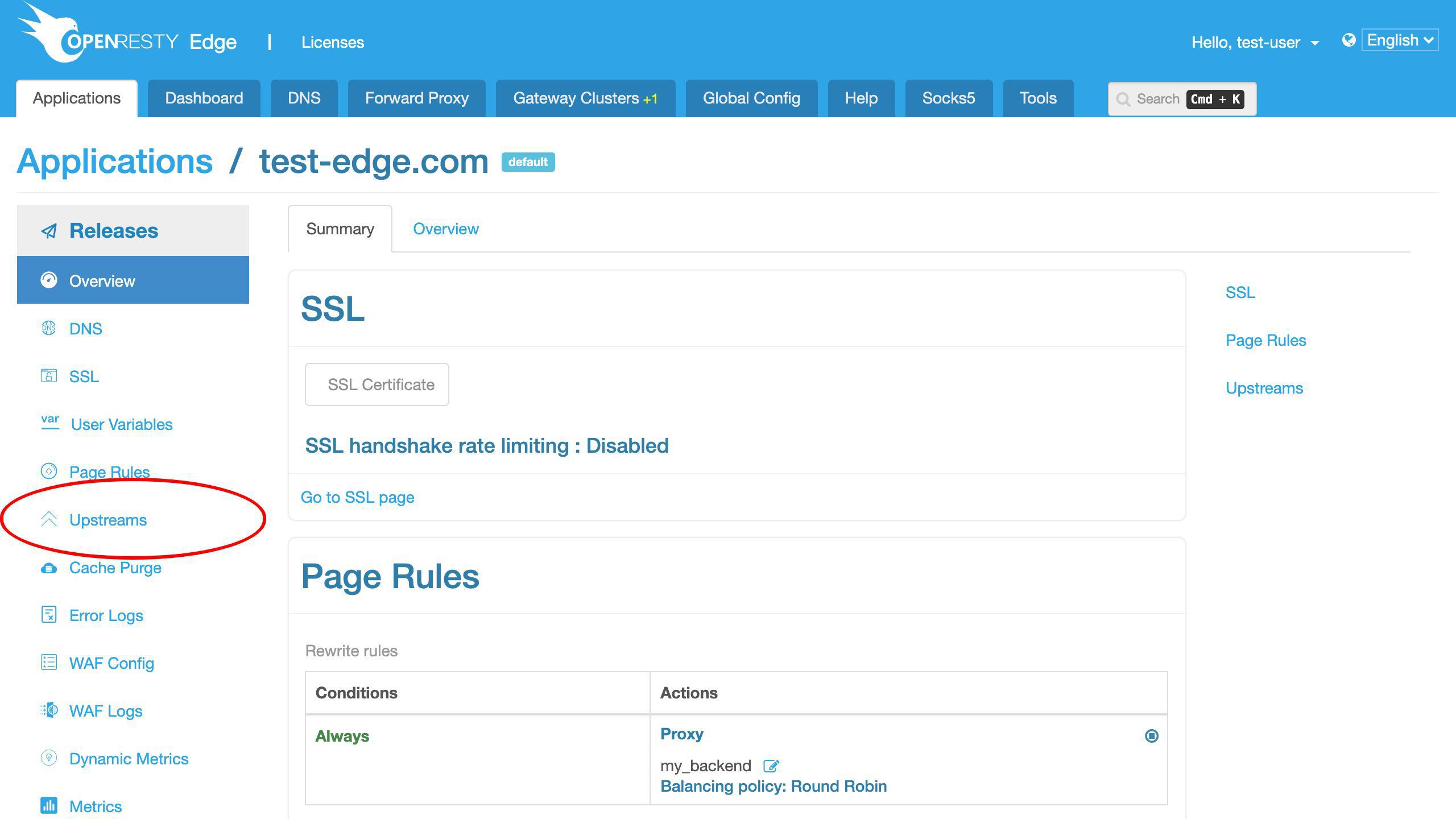

We can continue with our previous application example, test-edge.com.

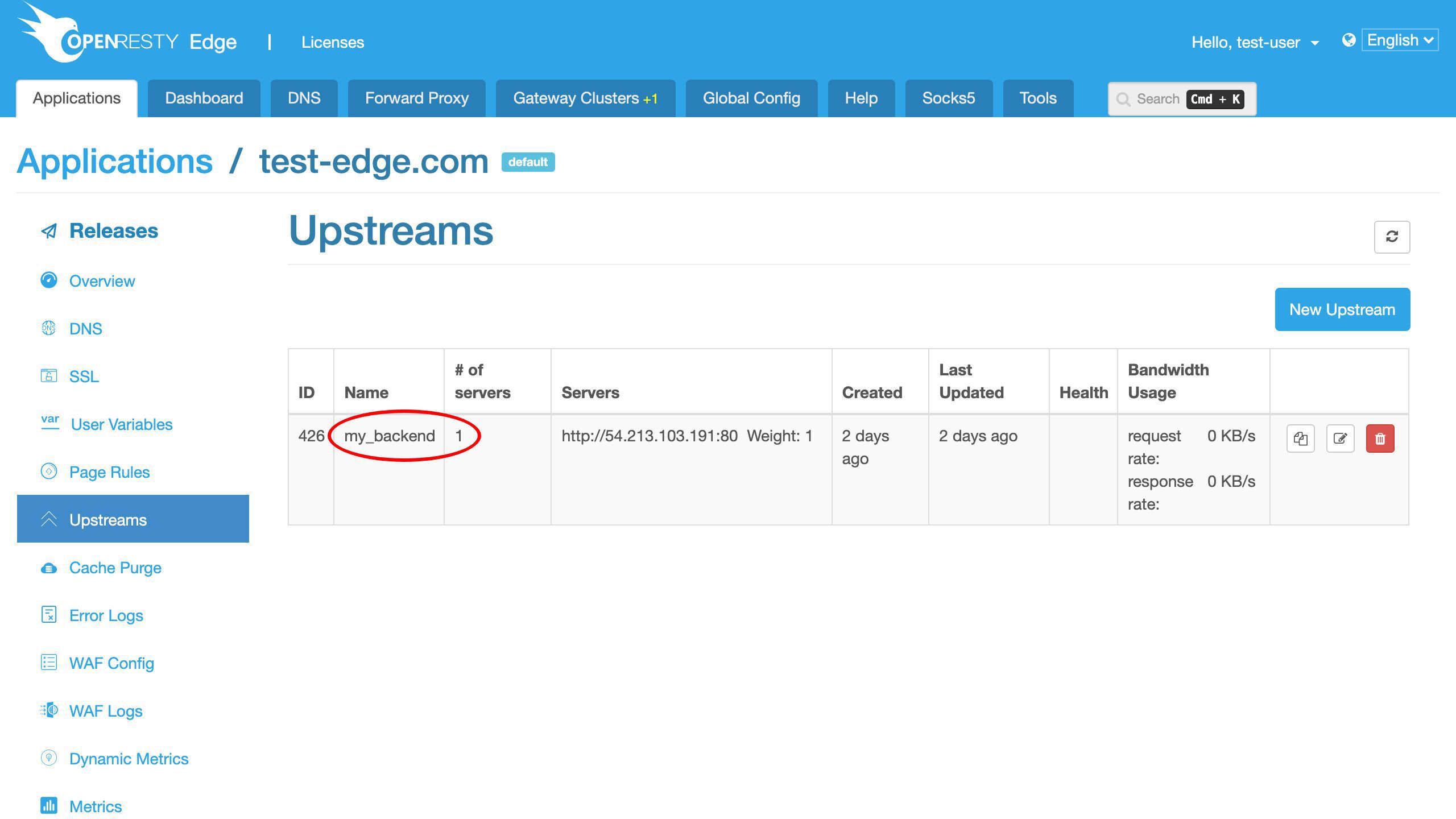

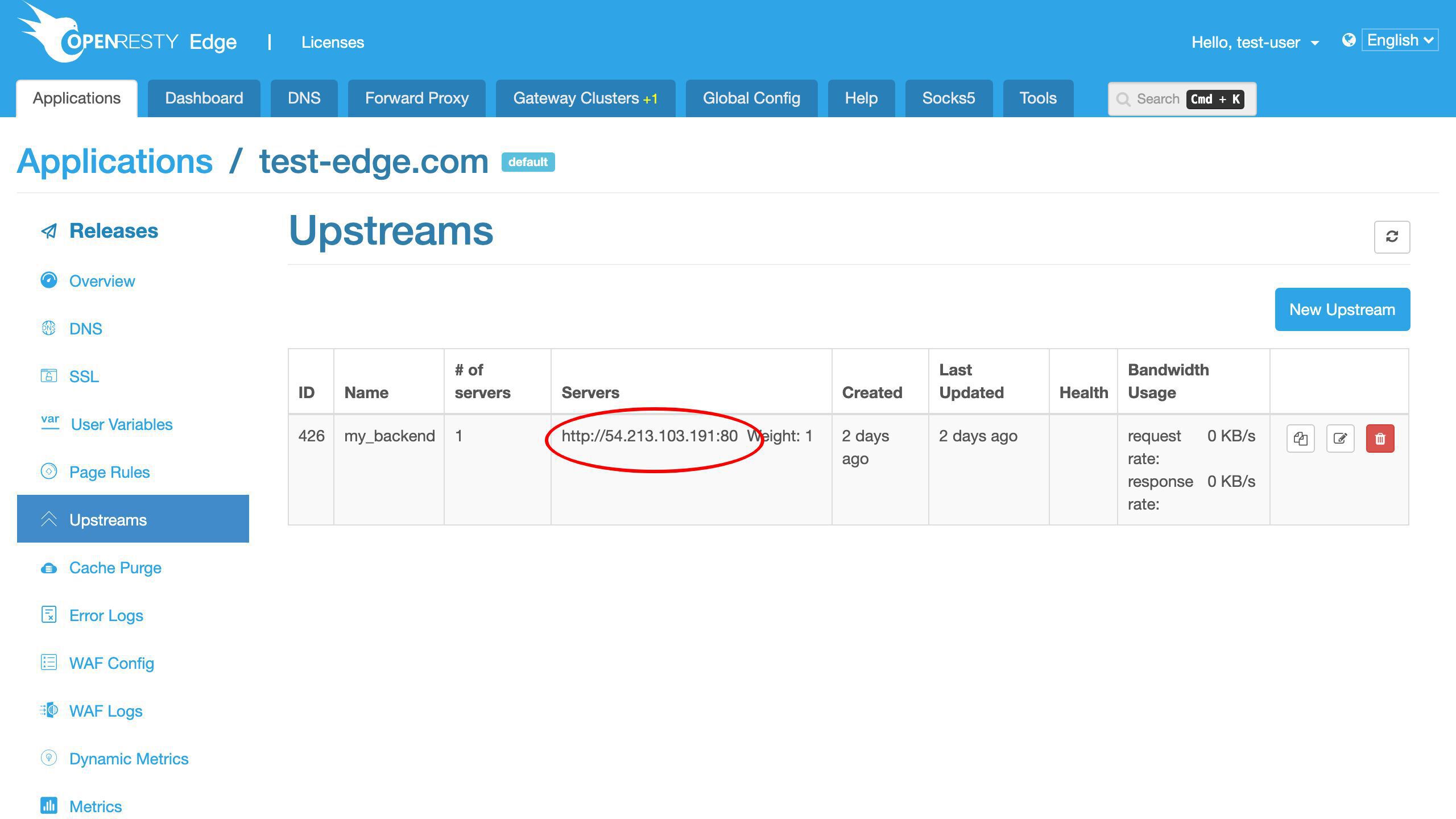

We already have an upstream defined.

This my_backend upstream has only one backend server.

Note that the IP address of the backend server ends with 191. We will use this IP address later.

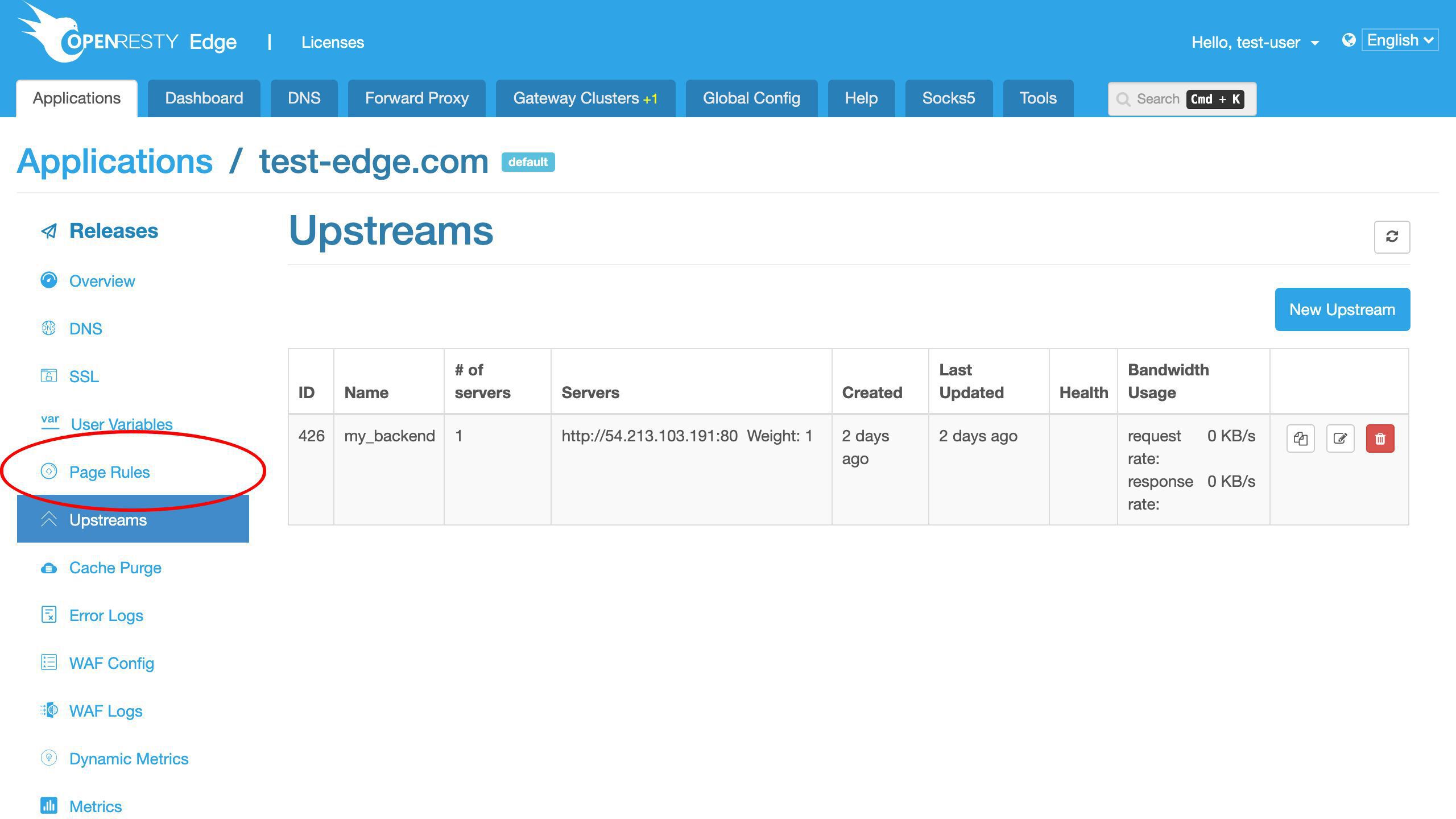

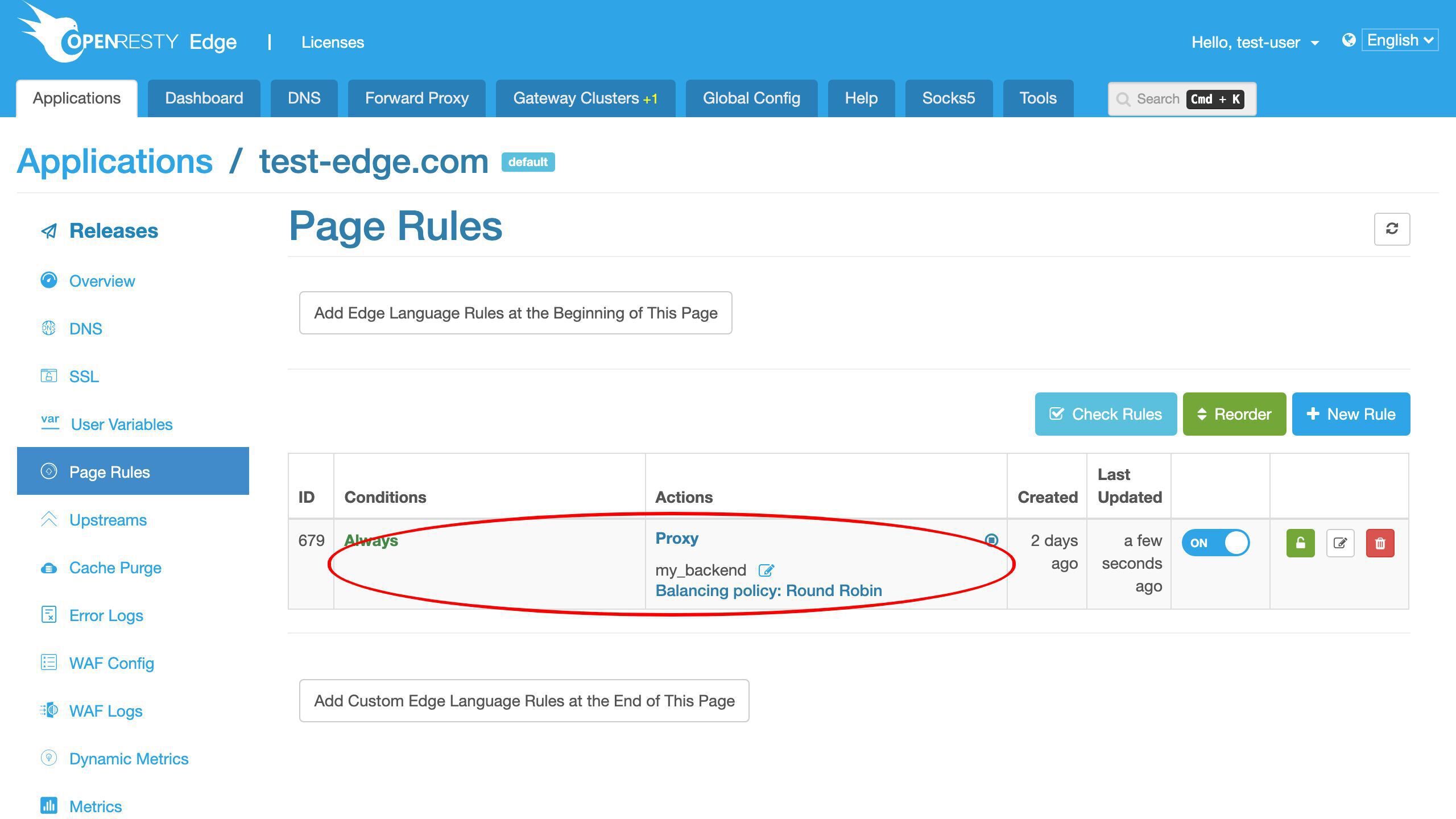

And we also have a page rule already defined.

This page rule sets up a reverse proxy to this upstream.

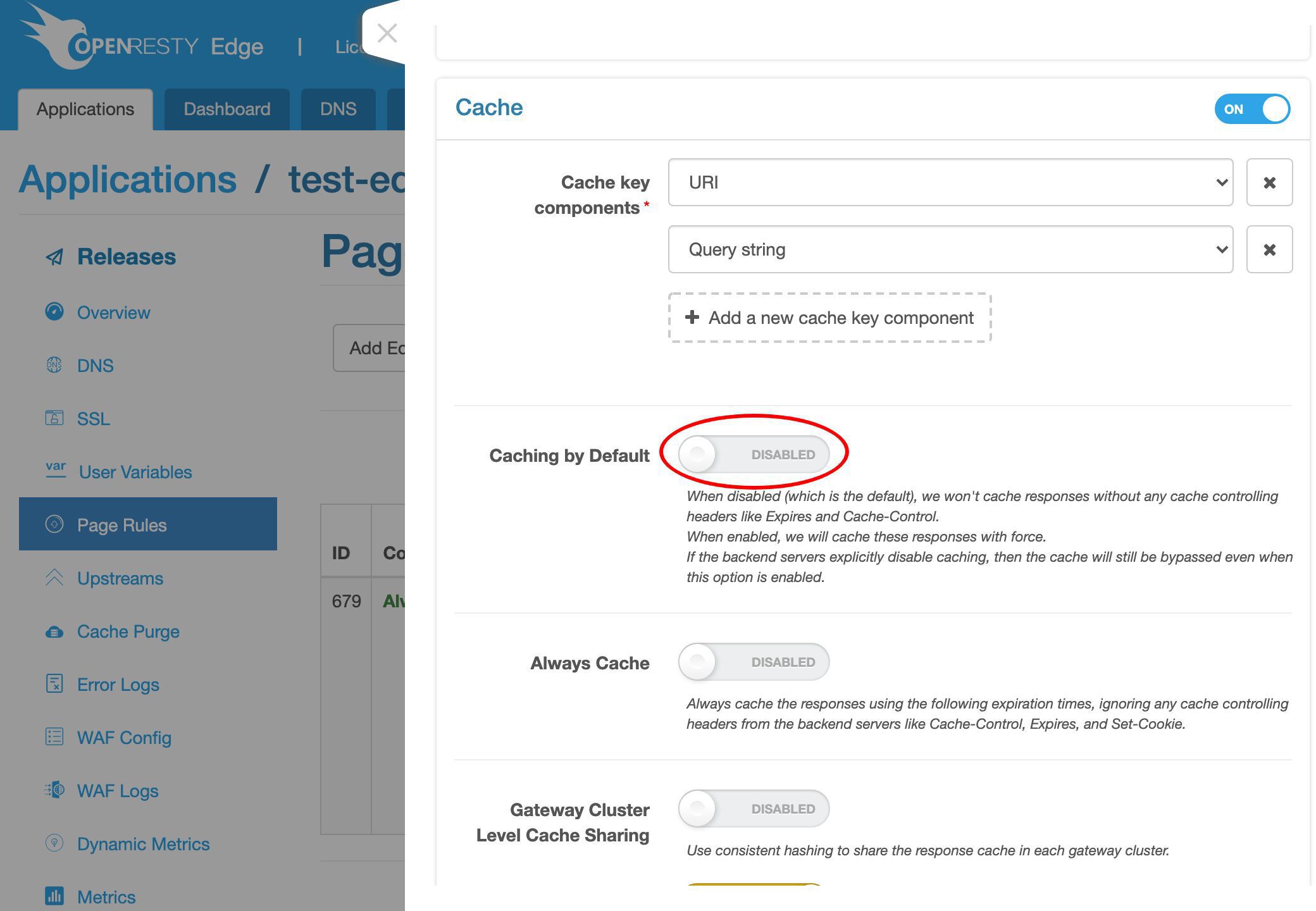

As we can see, the proxy cache is not yet enabled for this page rule.

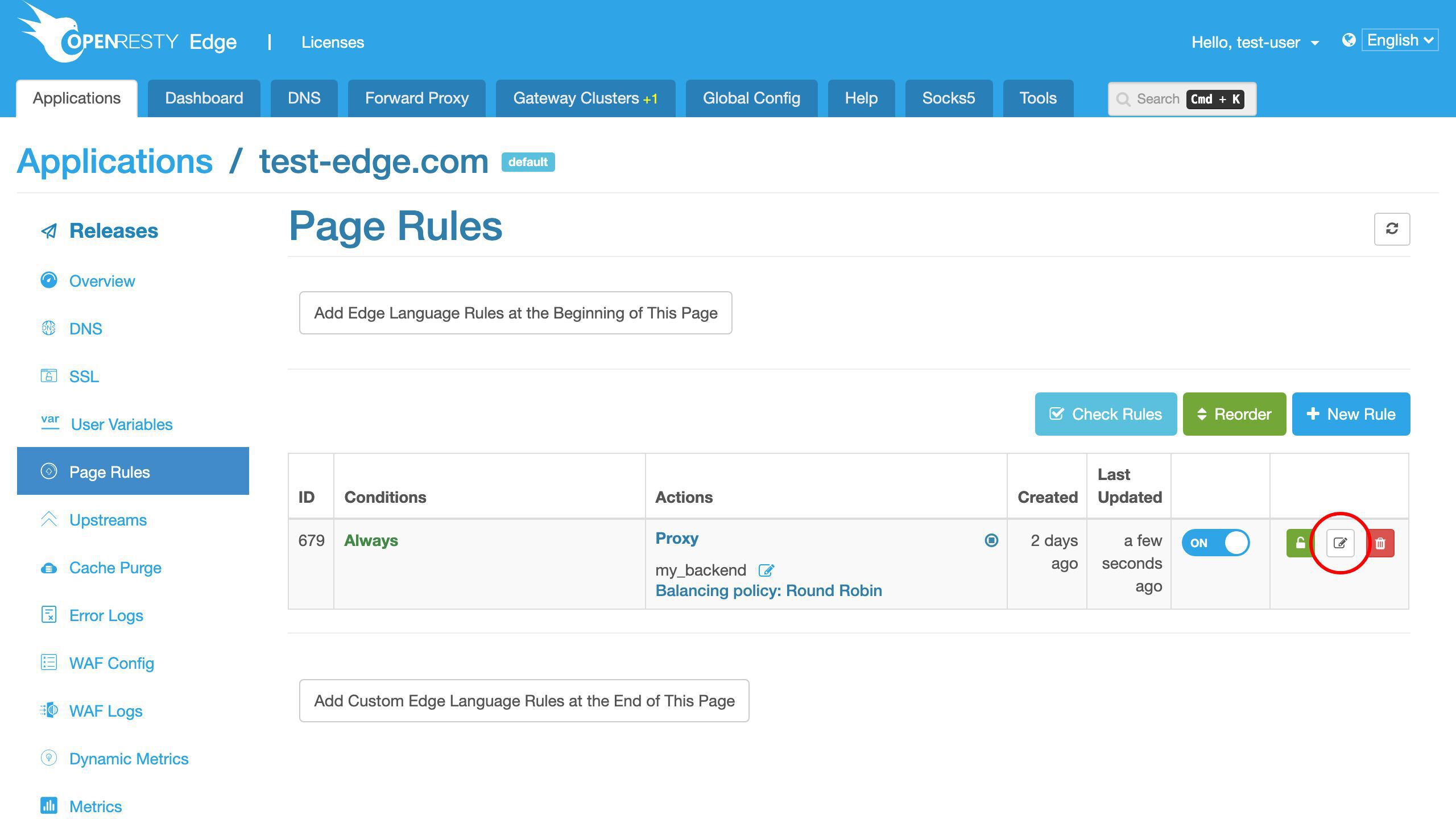

Now let’s edit this page rule to add response caching in the gateway.

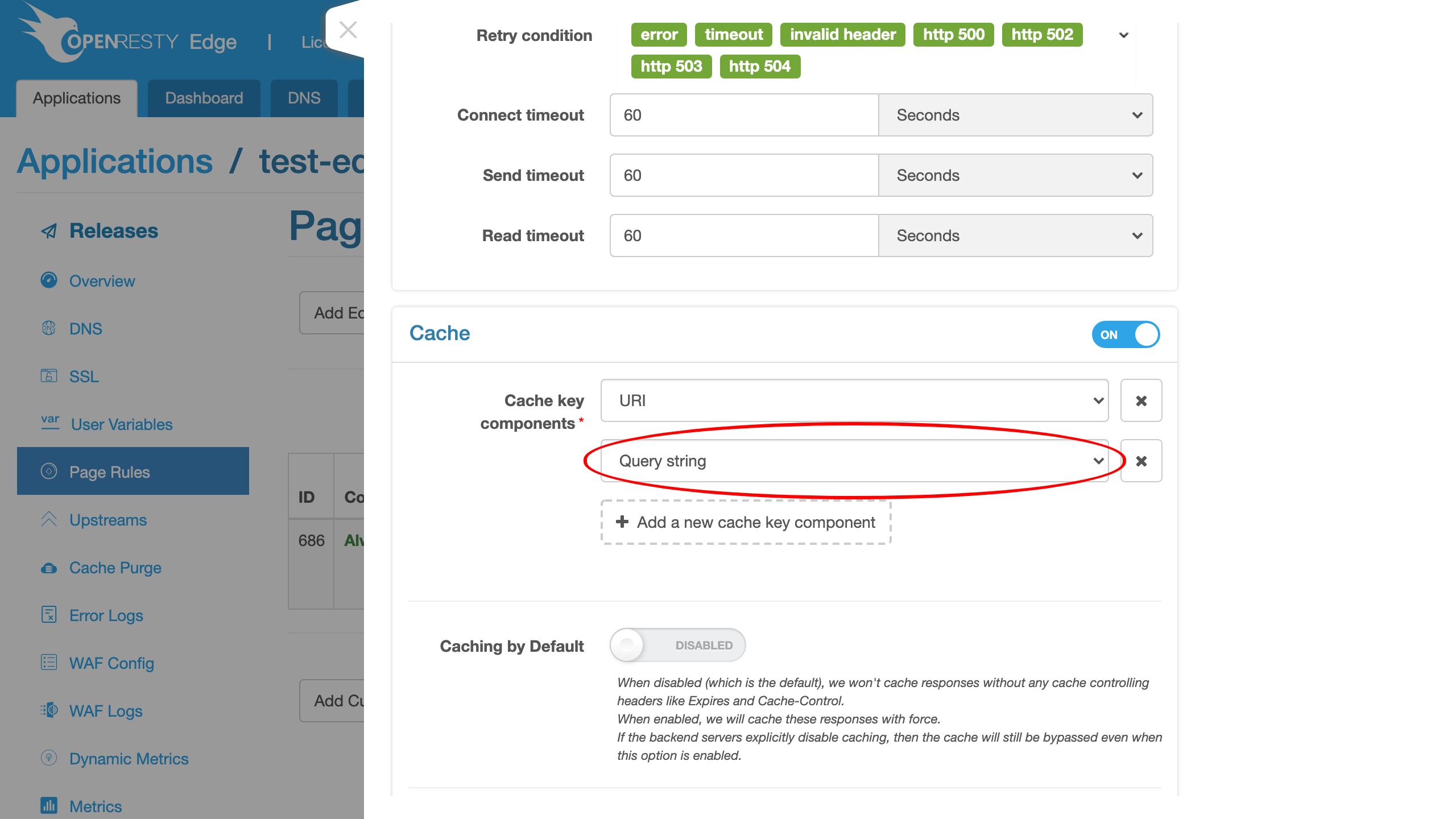

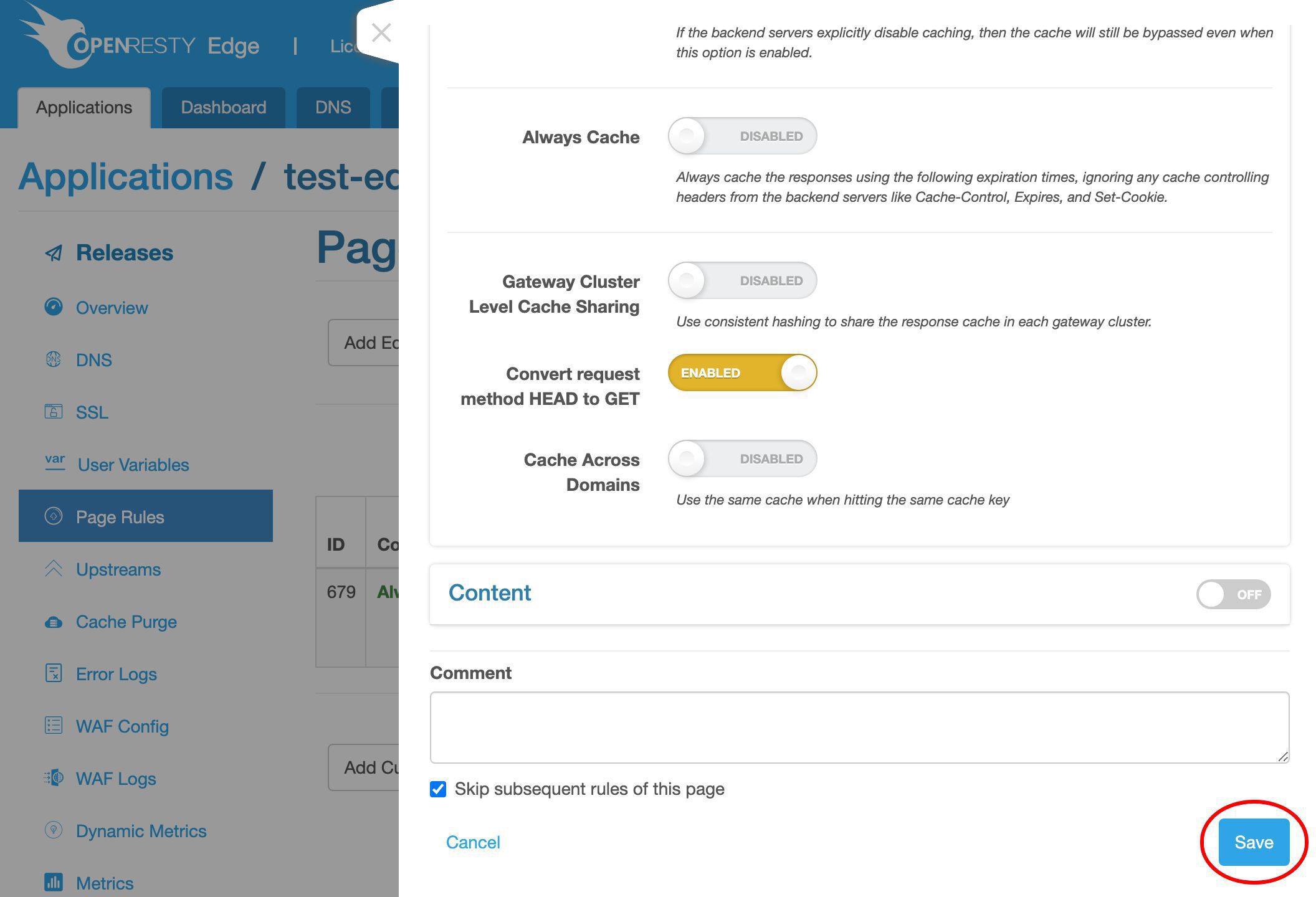

Let’s enable the proxy cache.

Here we can configure the cache key.

By default, the cache key consists of two components: the URI and the query string.

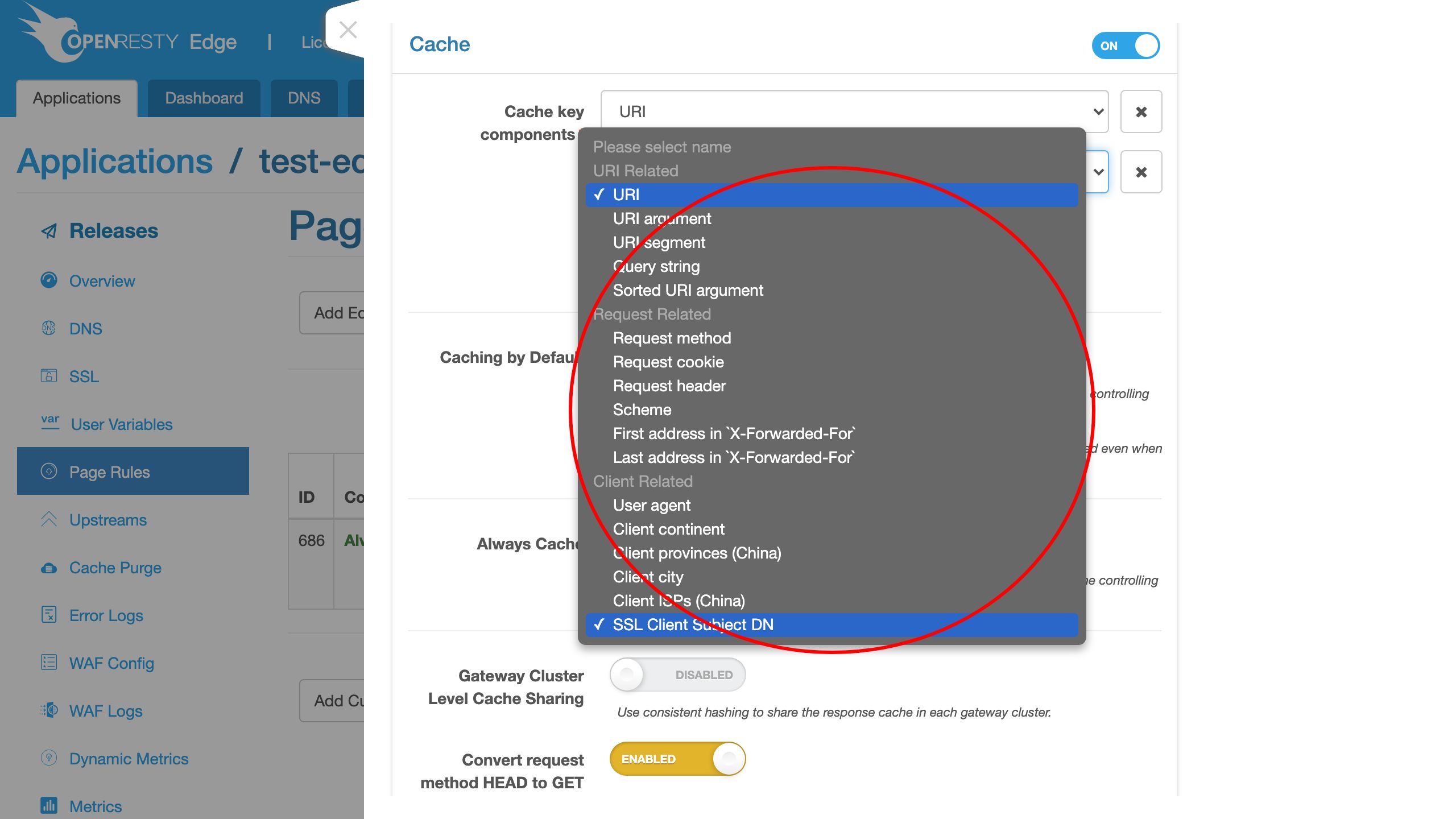

You can choose to use other kinds of key components.

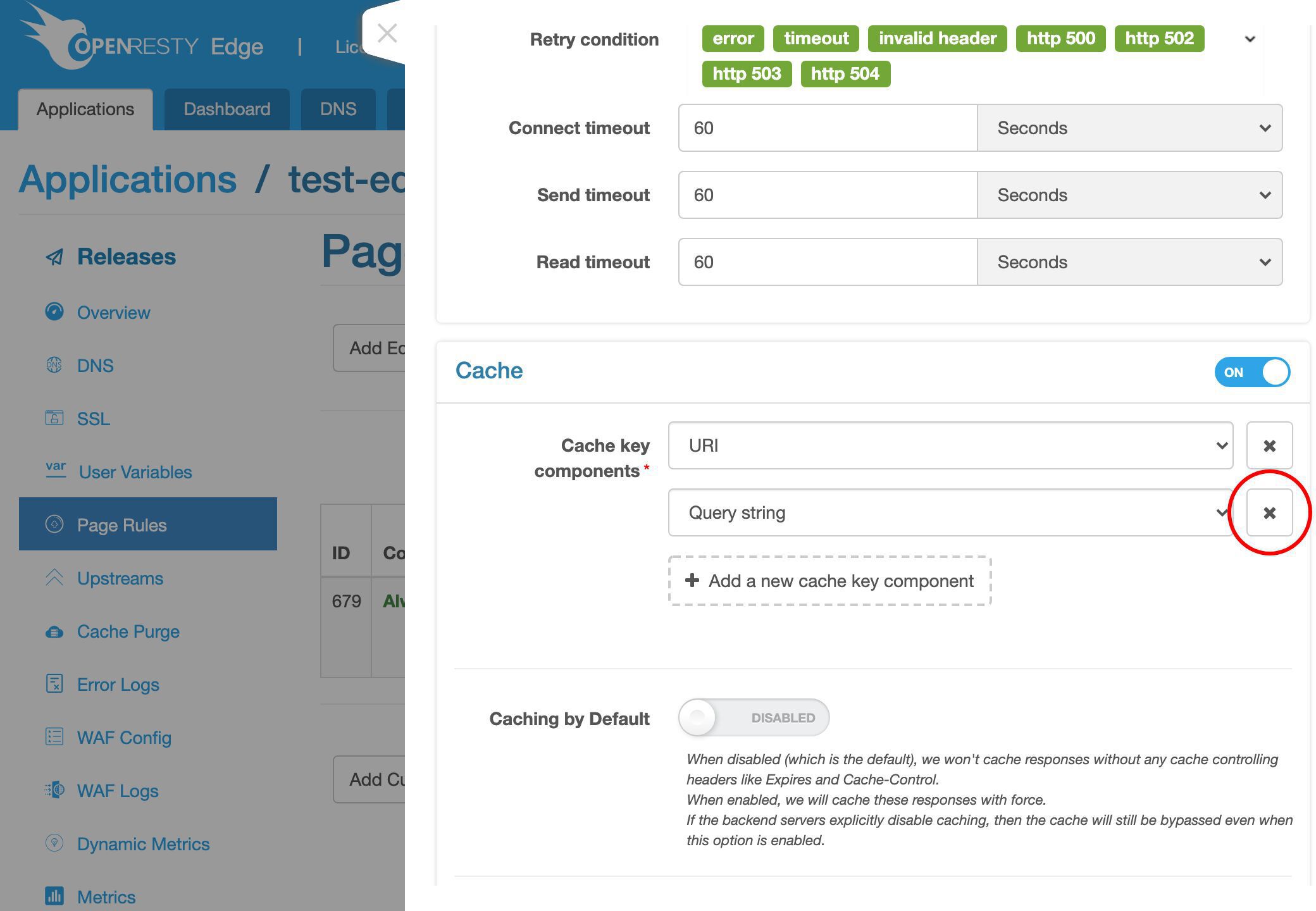

Or we can remove the whole query string from the key.

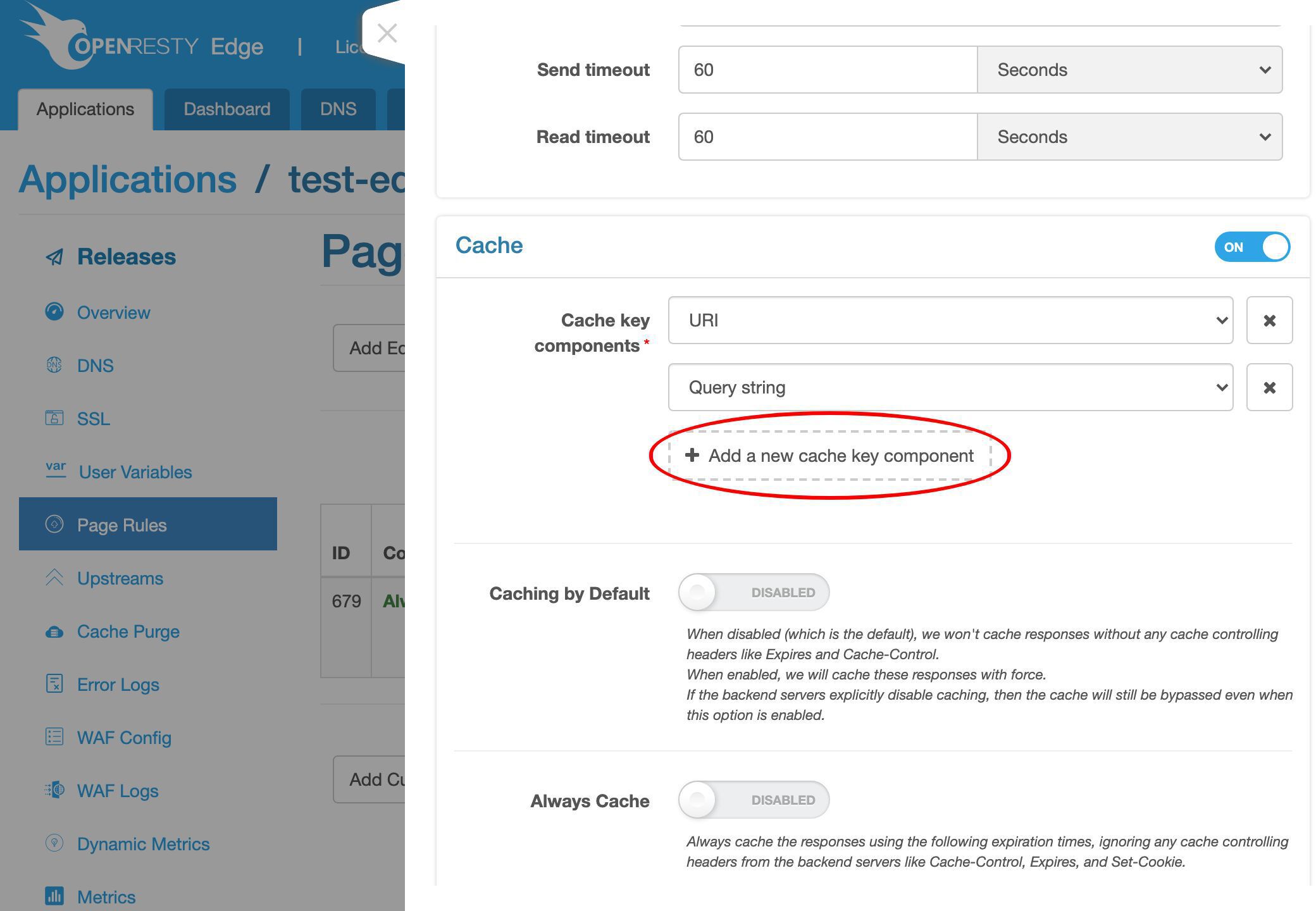

We can also add even more key components. For this example, we’ll just keep the default cache key.
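To make the idea of key components concrete, here is a minimal shell sketch of how a cache key could be assembled from the URI and the query string. The cache_key helper and its third argument are hypothetical names used for illustration only. This is not OpenResty Edge's actual implementation or key format.

```shell
# Hypothetical helper (illustration only, not the OpenResty Edge key format):
# build a cache key from a URI and an optional query string.
#   $1 = URI
#   $2 = query string (may be empty)
#   $3 = "yes" (default) to include the query string, "no" to drop it
cache_key() {
    uri=$1
    query=$2
    with_query=${3:-yes}
    if [ "$with_query" = yes ] && [ -n "$query" ]; then
        printf '%s?%s\n' "$uri" "$query"
    else
        printf '%s\n' "$uri"
    fi
}

cache_key / ''          # key for a request without a query string
cache_key / 'a=3'       # default behavior: the query string is part of the key
cache_key / 'a=3' no    # query string removed from the key
```

With the query string included, the first two calls produce different keys, so the two URLs would be cached as separate entries. With the query string removed, both map to the same key.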

Save this page rule.

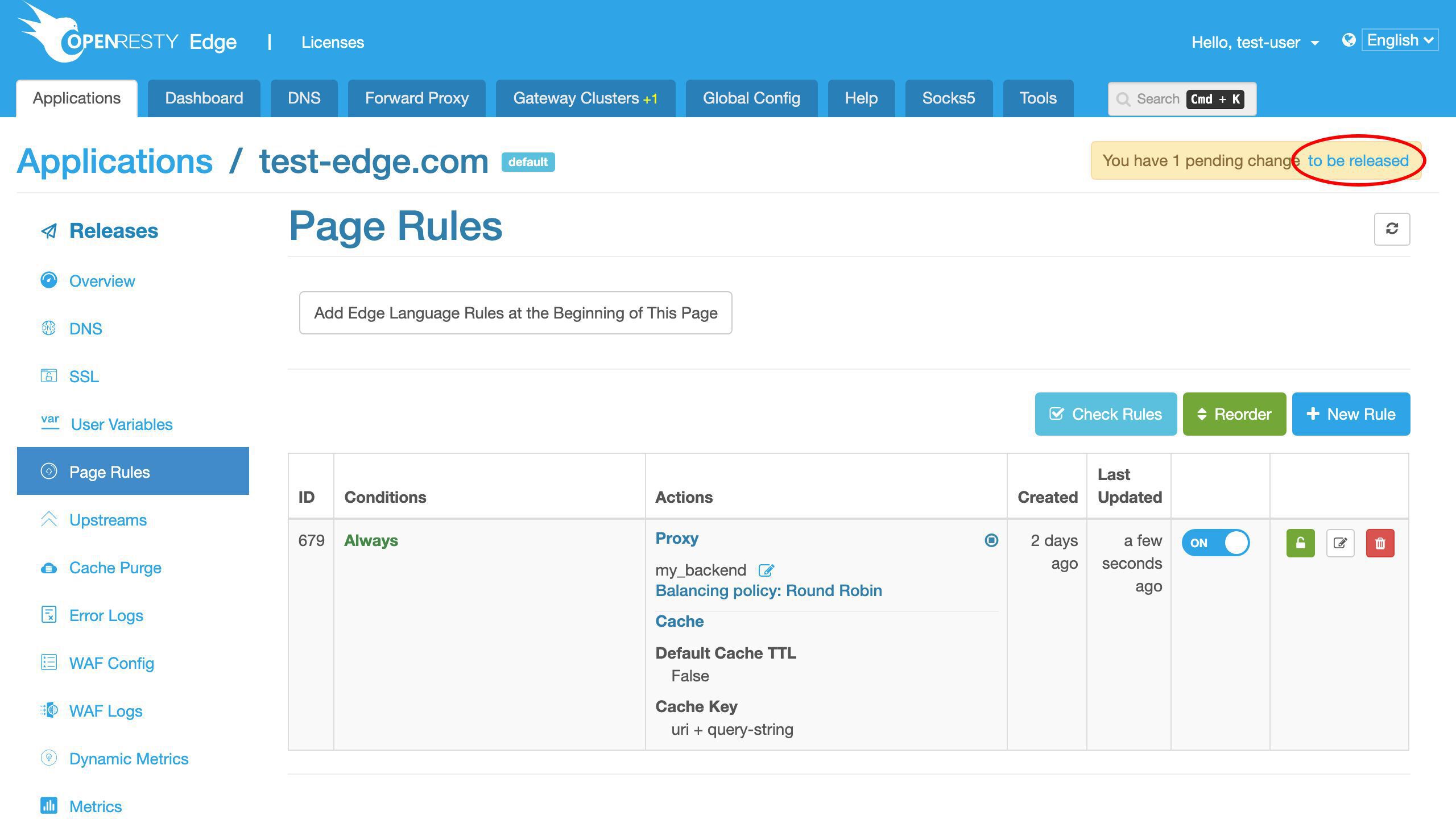

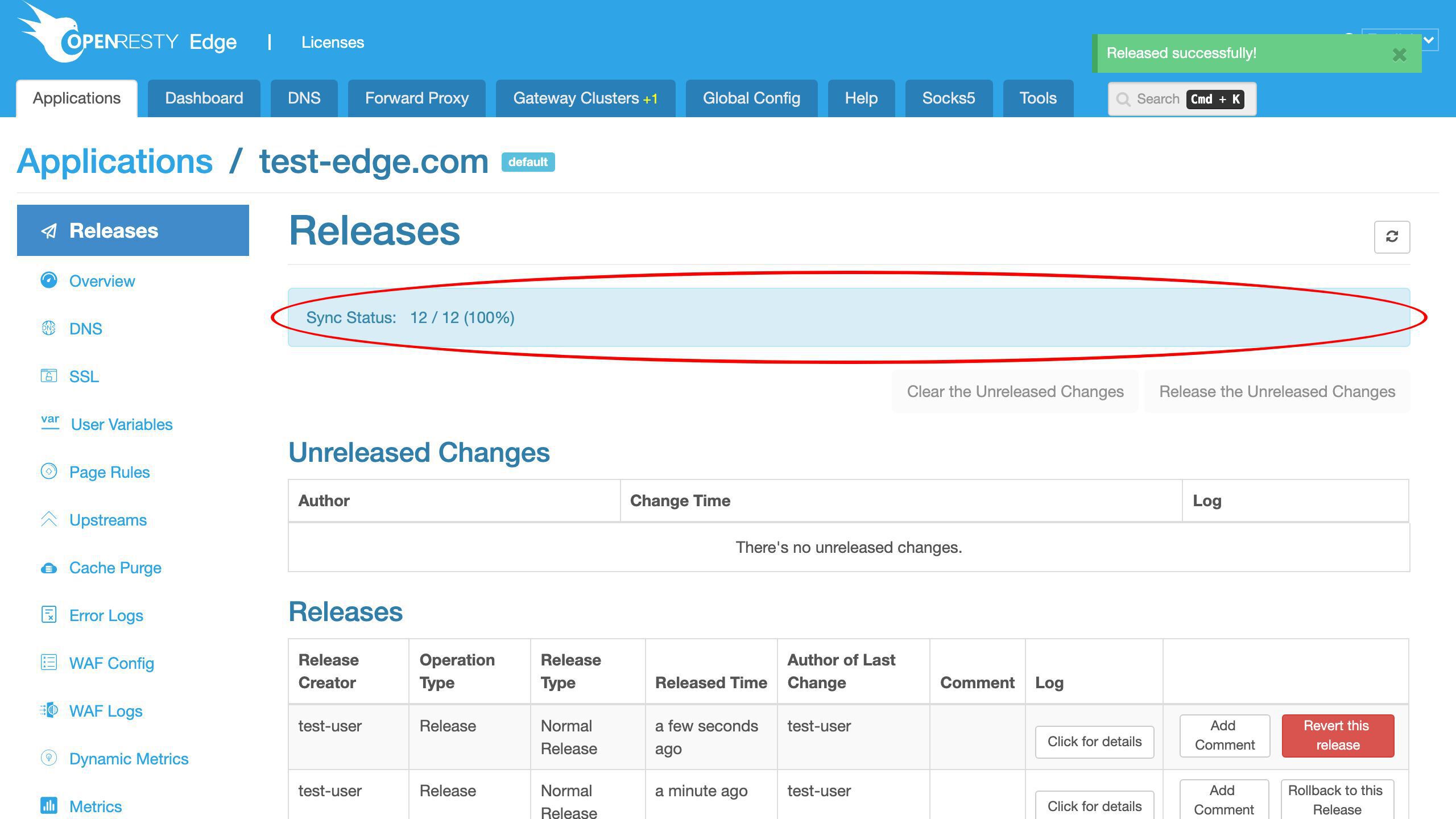

Let’s make a new release.

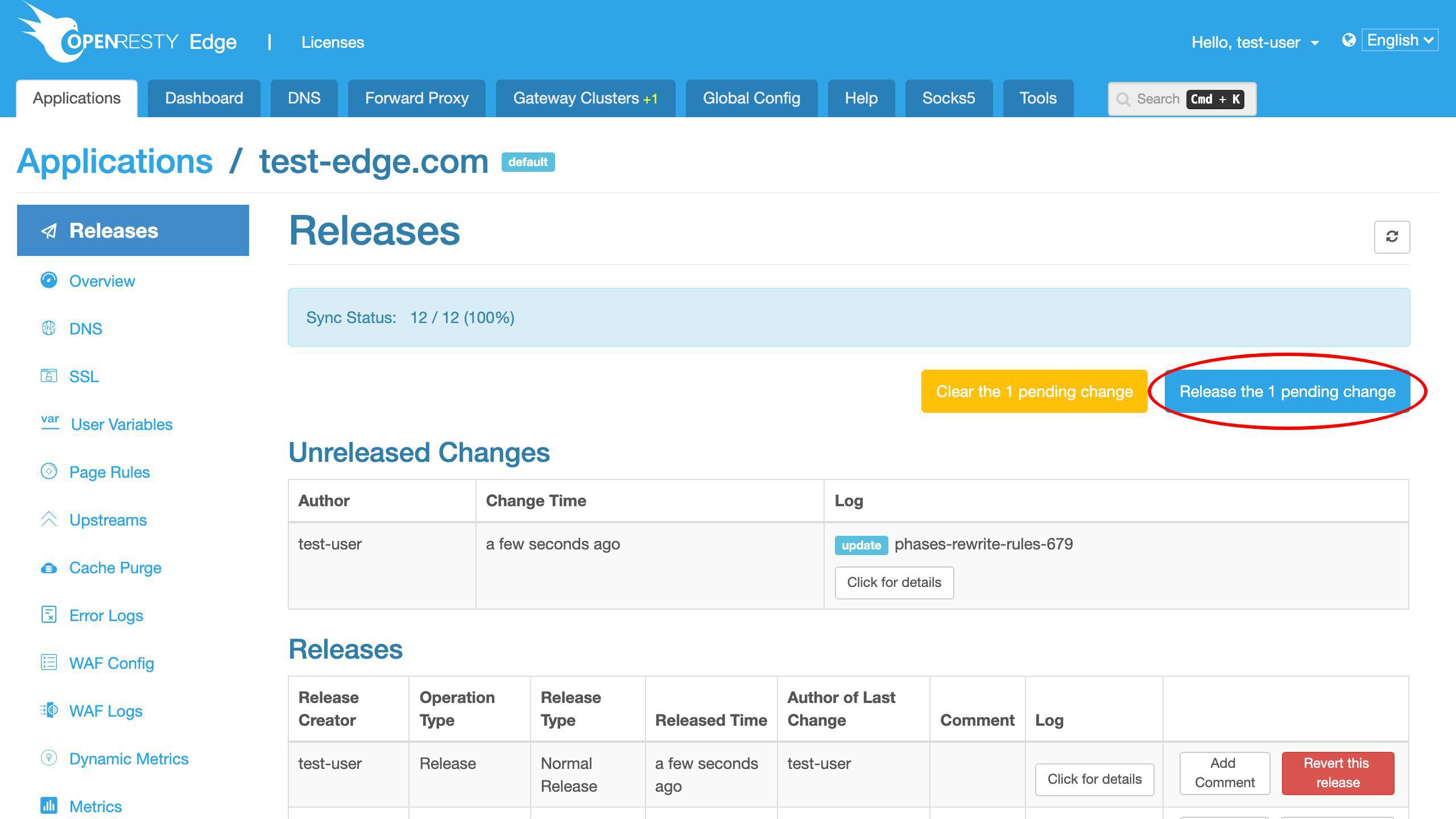

Push out our pending changes.

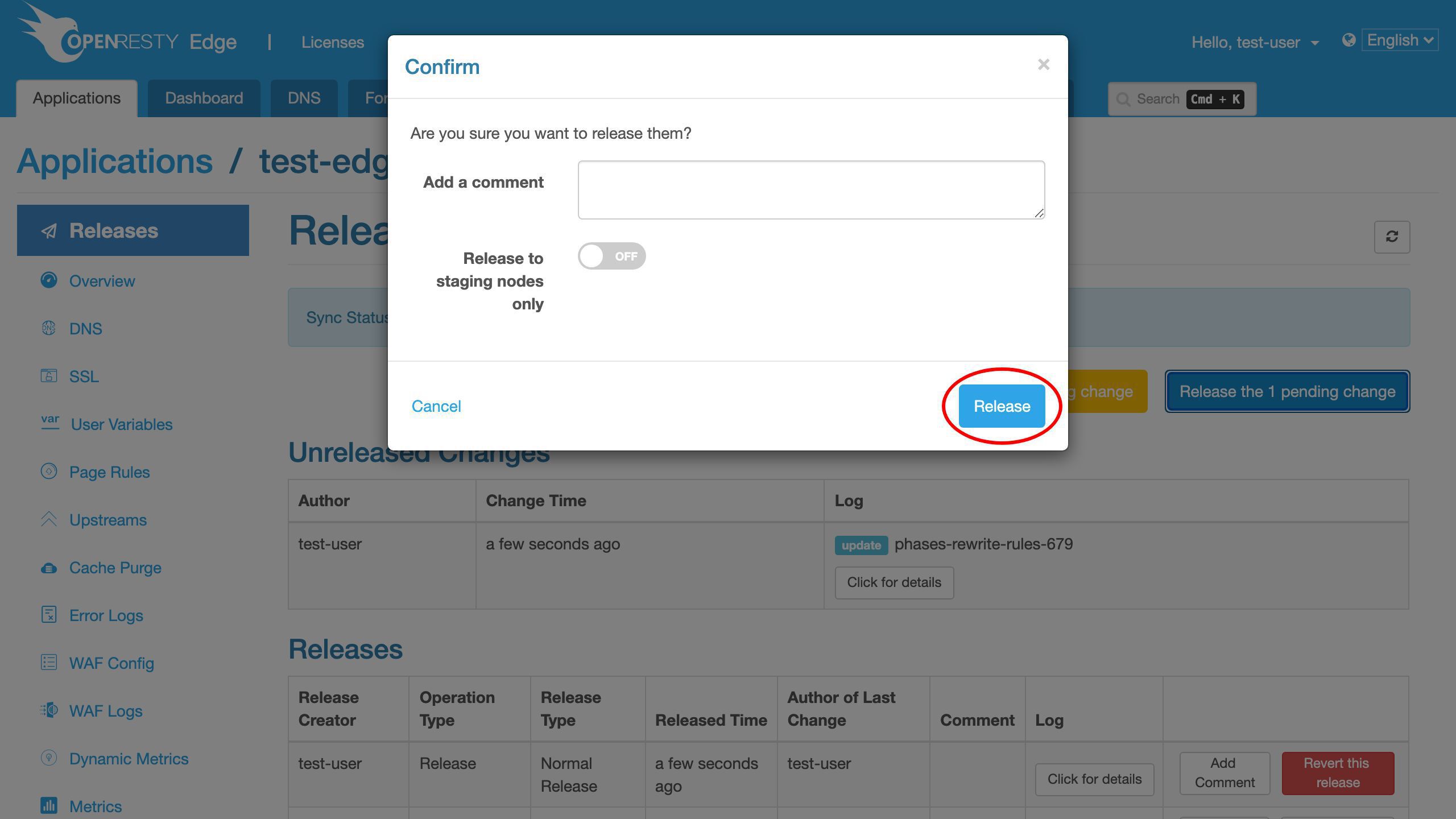

Ship it!

Our new release is now synchronized to all our gateway servers.

Our configuration changes do NOT require a server reload, restart, or binary upgrade, so releases are very efficient.

Test the response caching

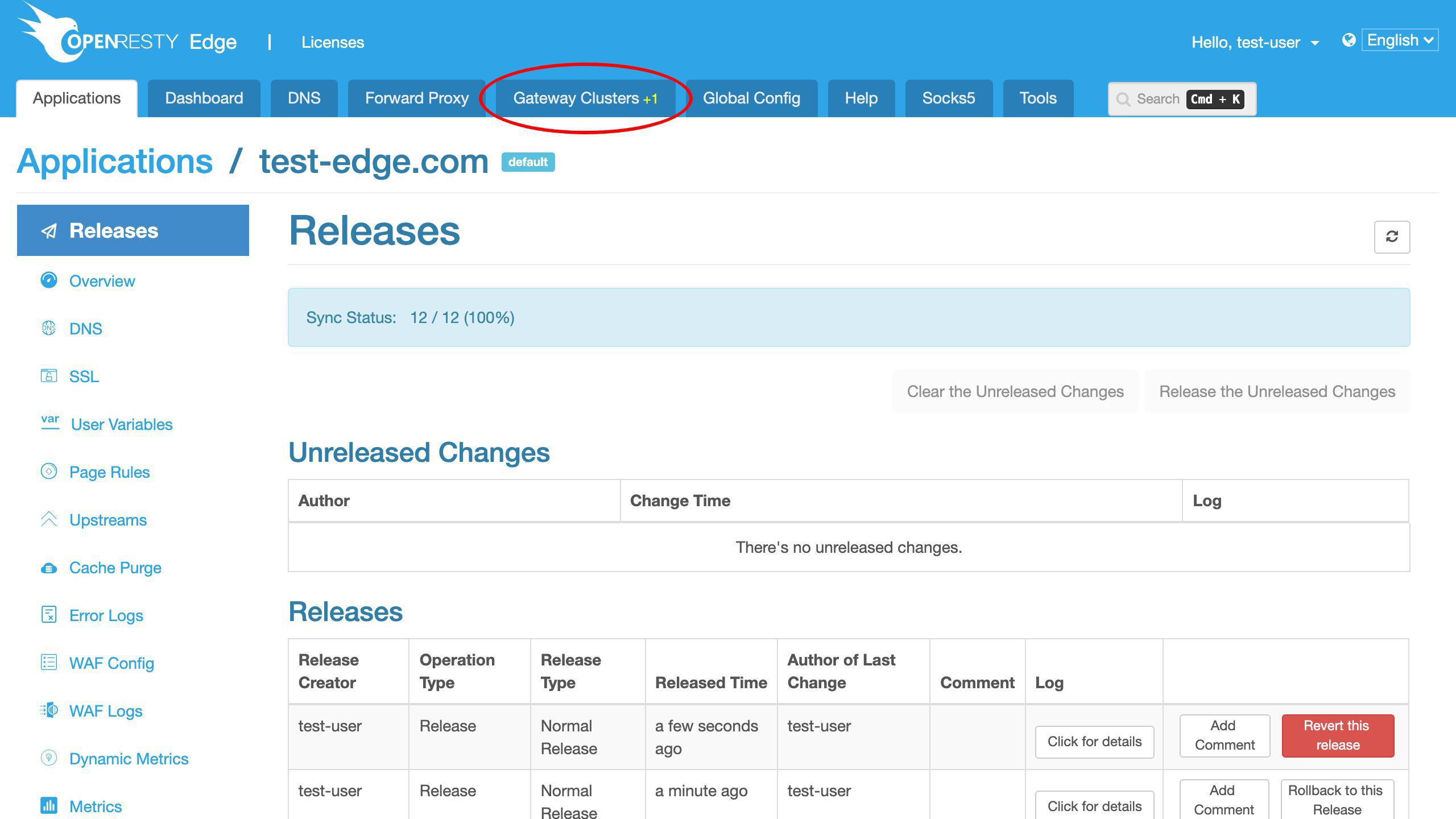

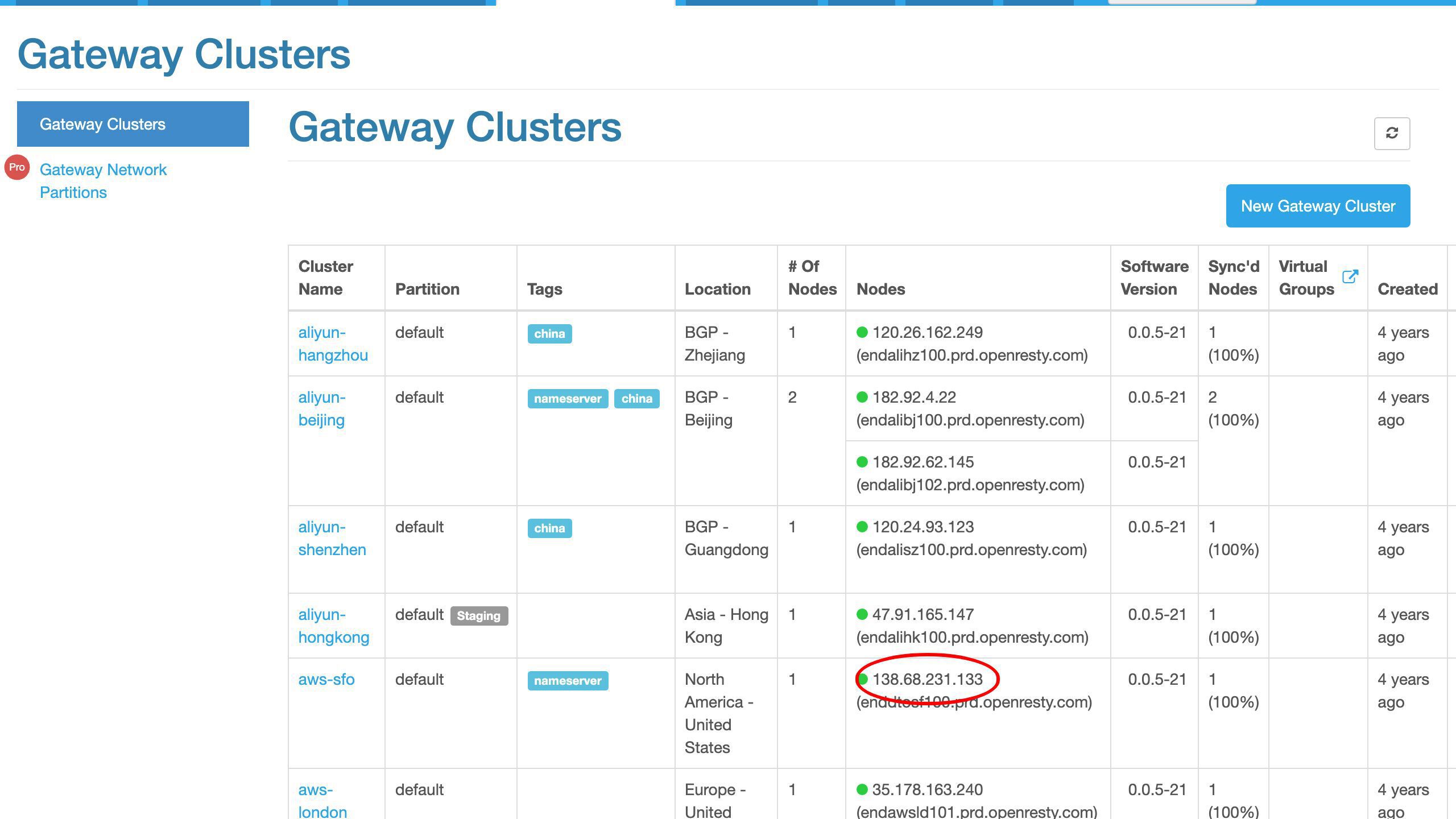

Let’s test a gateway server for caching.

We copy the IP address of this San Francisco gateway server.

Note the last number of this IP address is 133.

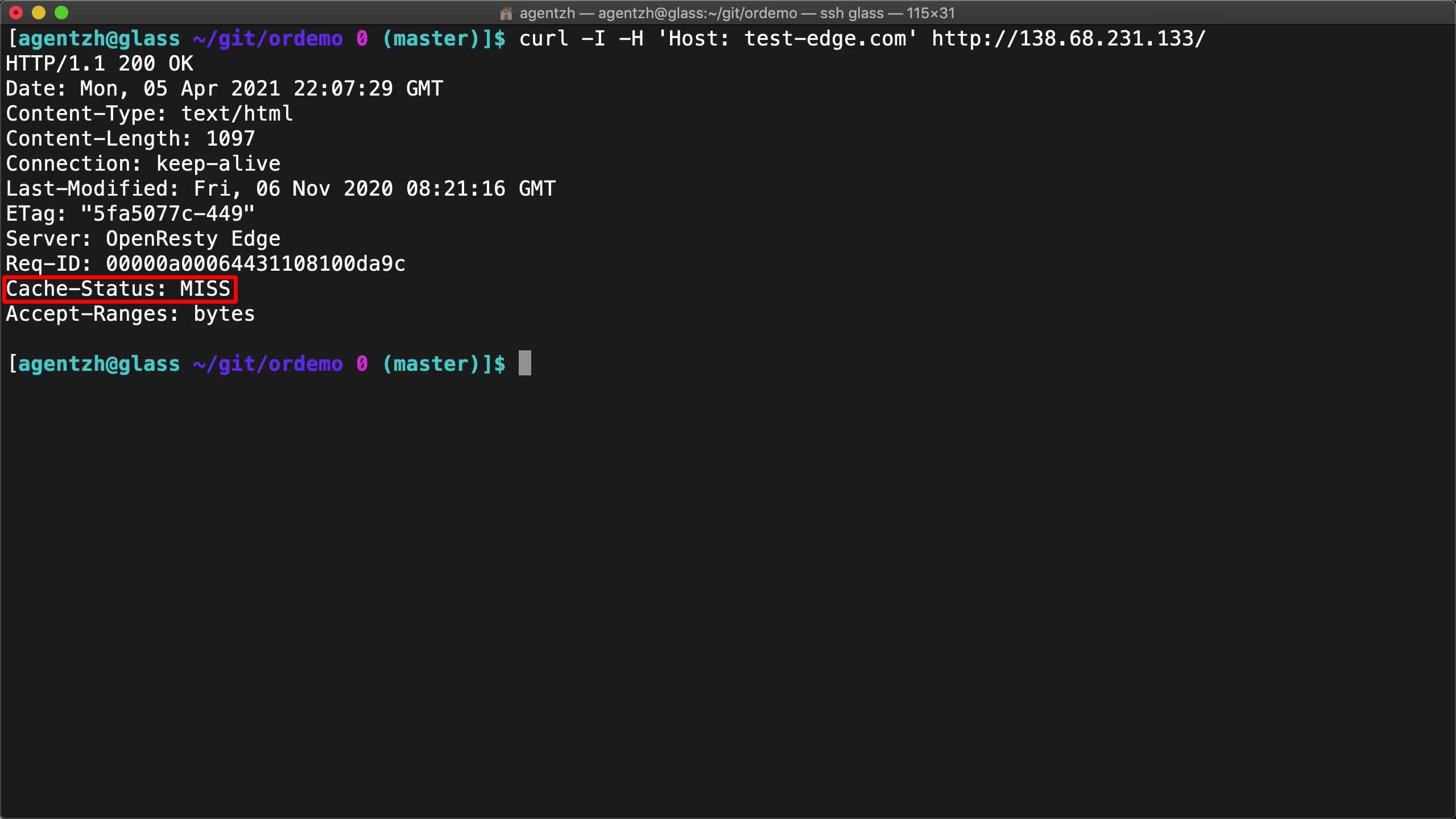

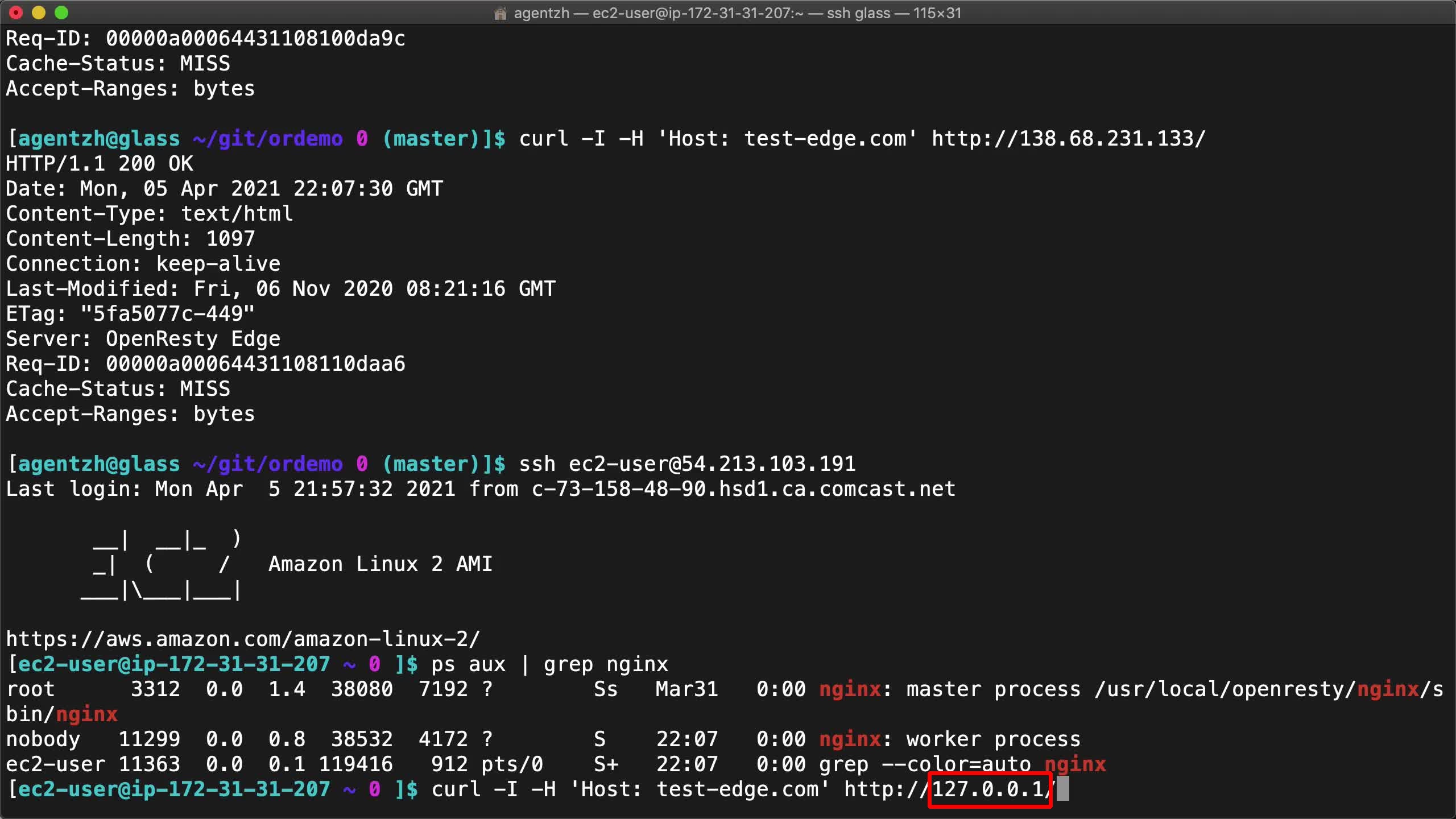

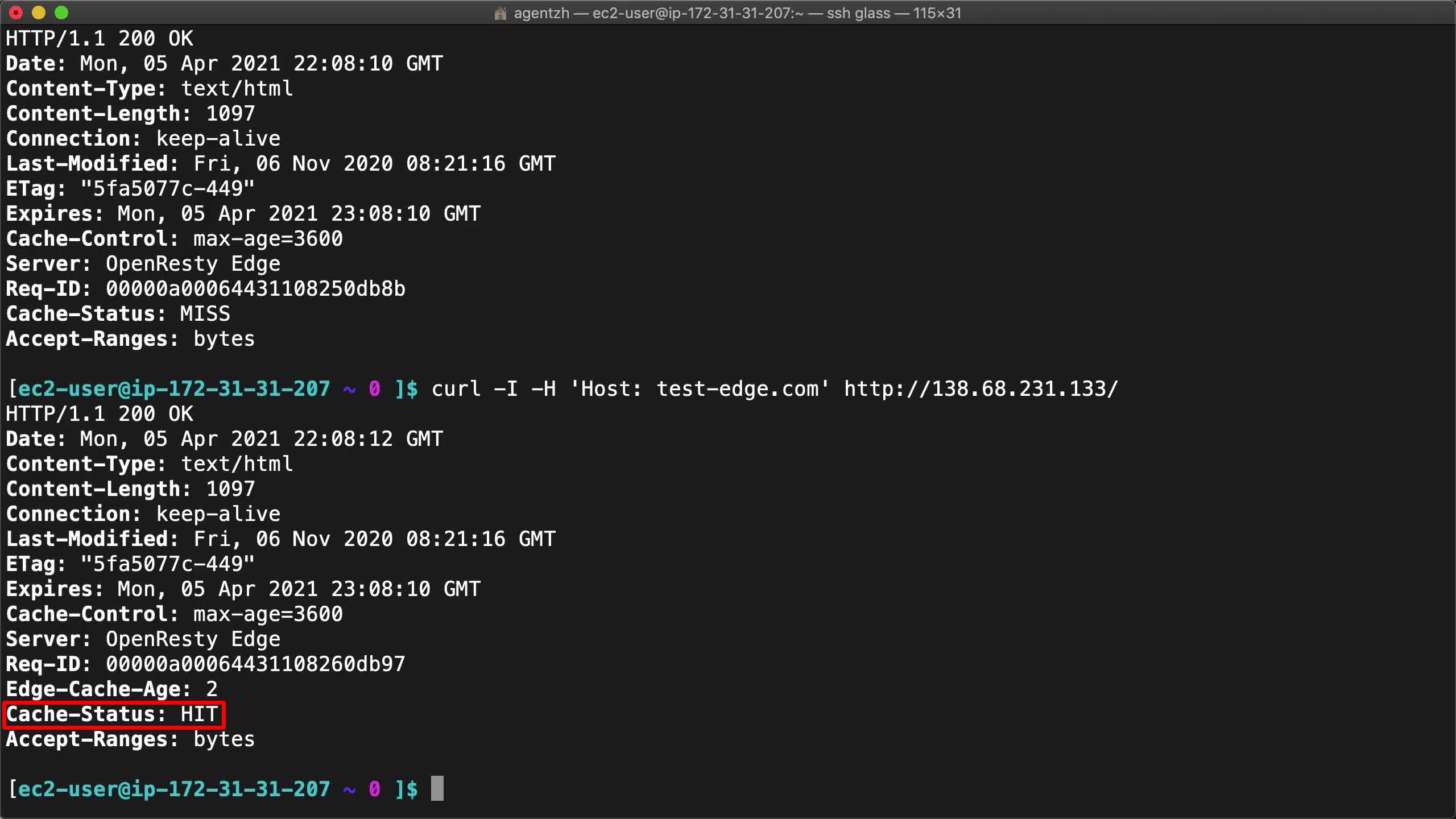

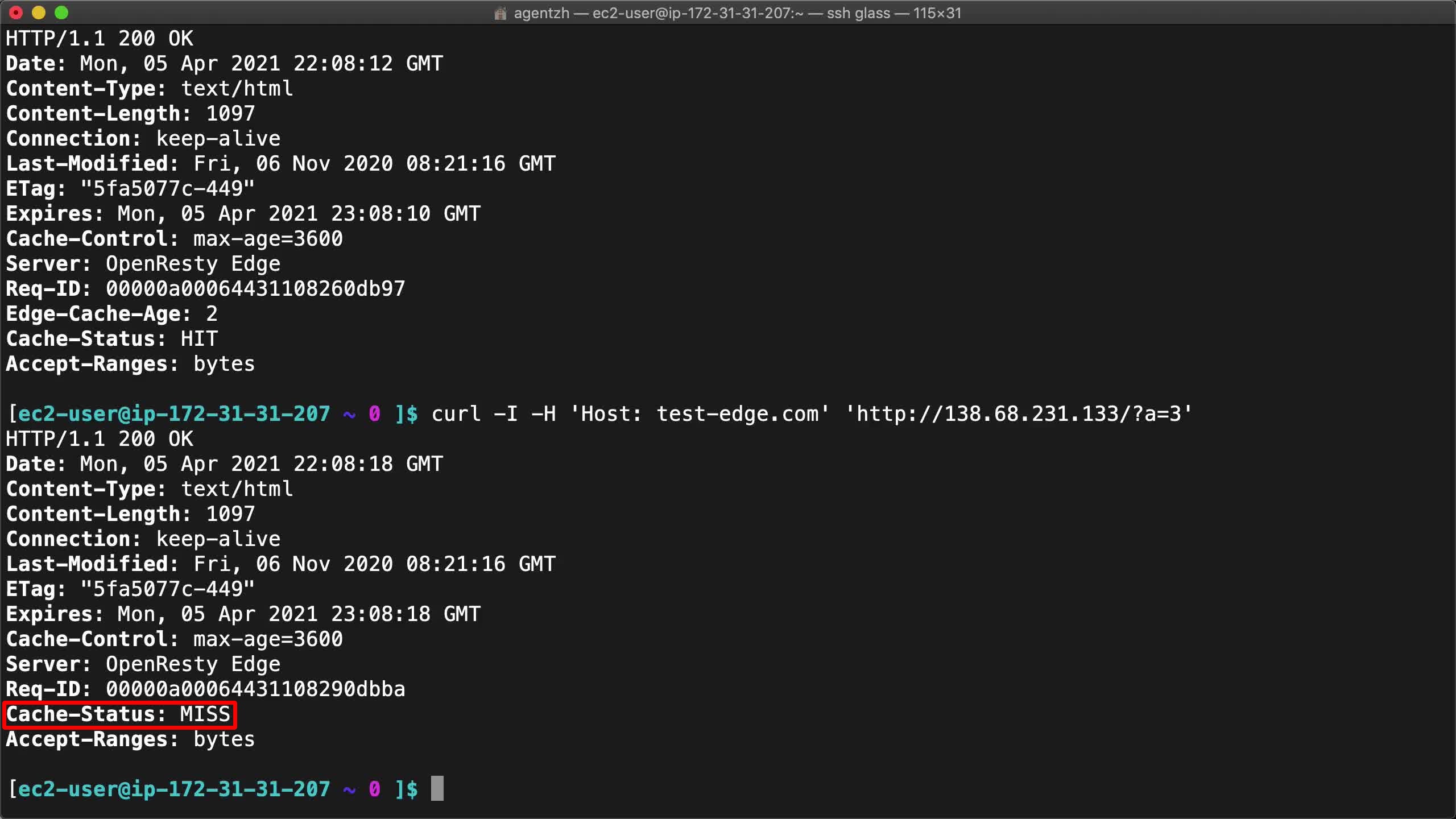

In the terminal, we send an HTTP request to this gateway server via the curl command-line utility.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

Note the Cache-Status: MISS response header returned.
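When the header dump gets noisy, it can help to print only the header we care about. The following is a small sketch. The cache_status name is a hypothetical helper, and it simply filters raw response headers read from standard input.

```shell
# Hypothetical helper: print the value of the Cache-Status header found in
# raw HTTP response headers read from standard input.
cache_status() {
    # Strip carriage returns, then match the header name case-insensitively.
    tr -d '\r' | awk -F': *' 'tolower($1) == "cache-status" { print $2 }'
}

# Demonstration on canned headers, so no network access is needed:
printf 'HTTP/1.1 200 OK\r\nContent-Type: text/html\r\nCache-Status: MISS\r\n\r\n' | cache_status

# Against the gateway server from this tutorial, the input could come from curl
# (-s silences the progress meter):
#   curl -sI -H 'Host: test-edge.com' http://138.68.231.133/ | cache_status
```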

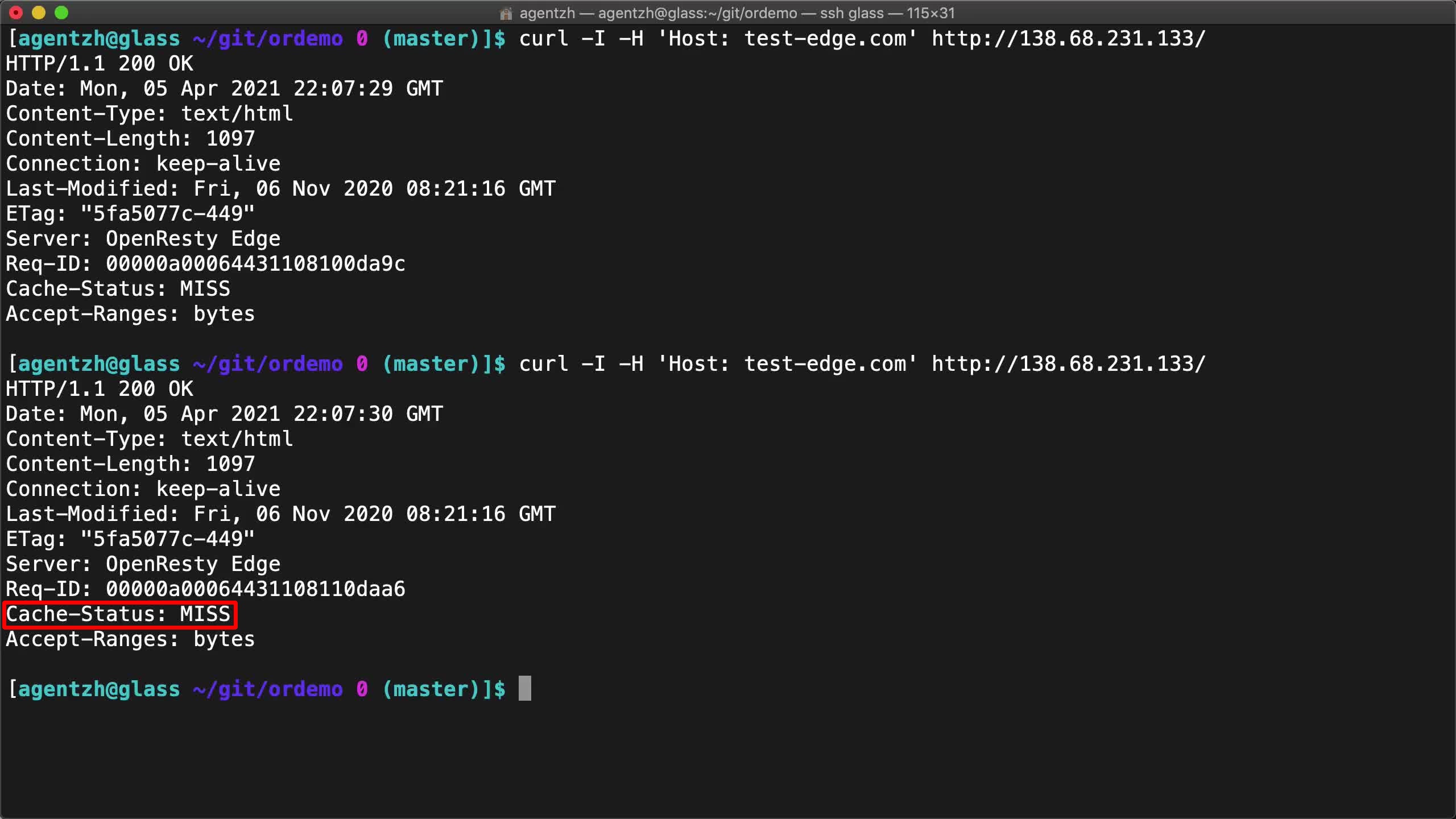

Do it again.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

We are still getting the Cache-Status: MISS header. It means that the cache is not used at all. Why? Because the original response from the backend server lacks caching headers such as Expires and Cache-Control.

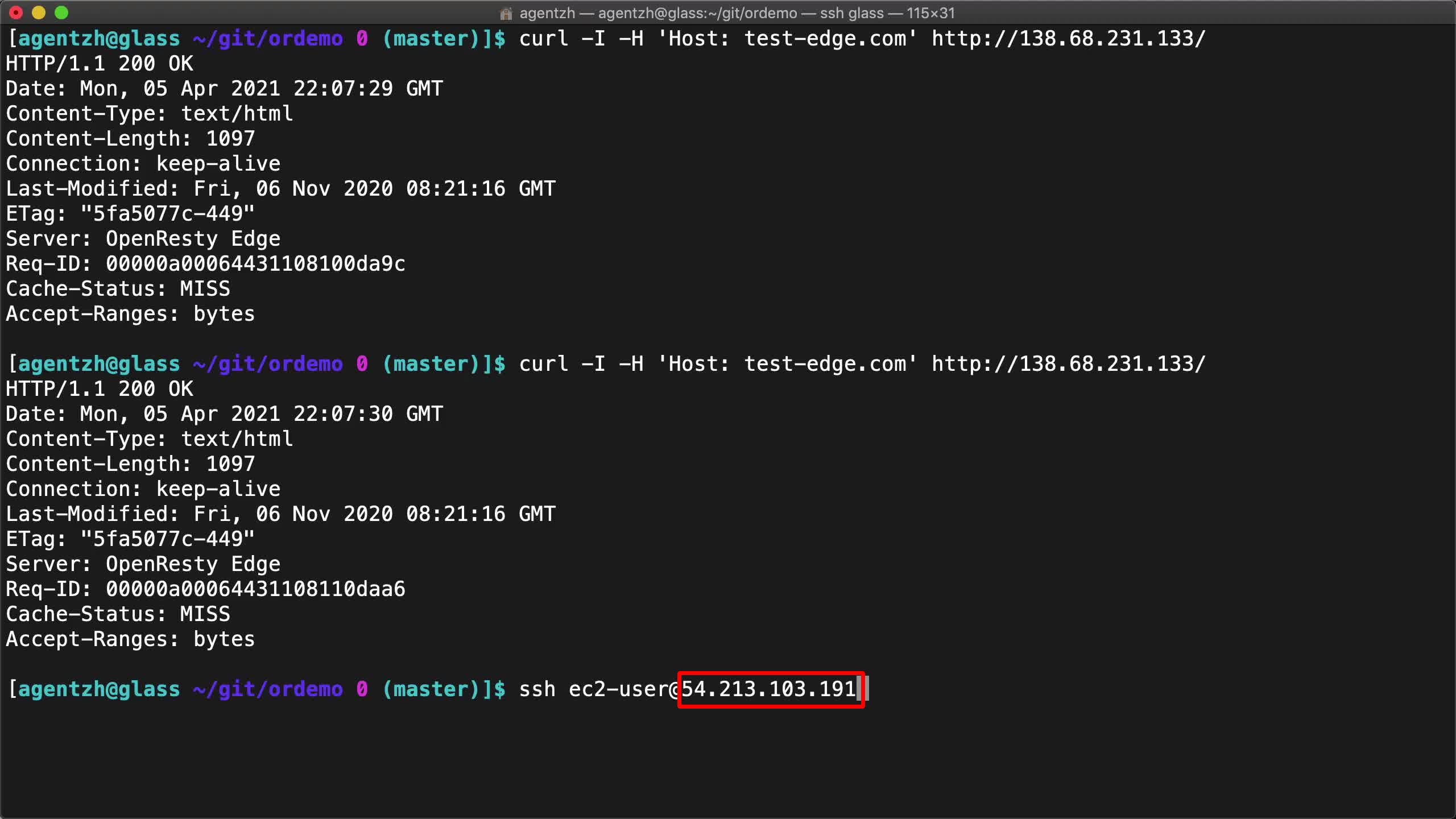

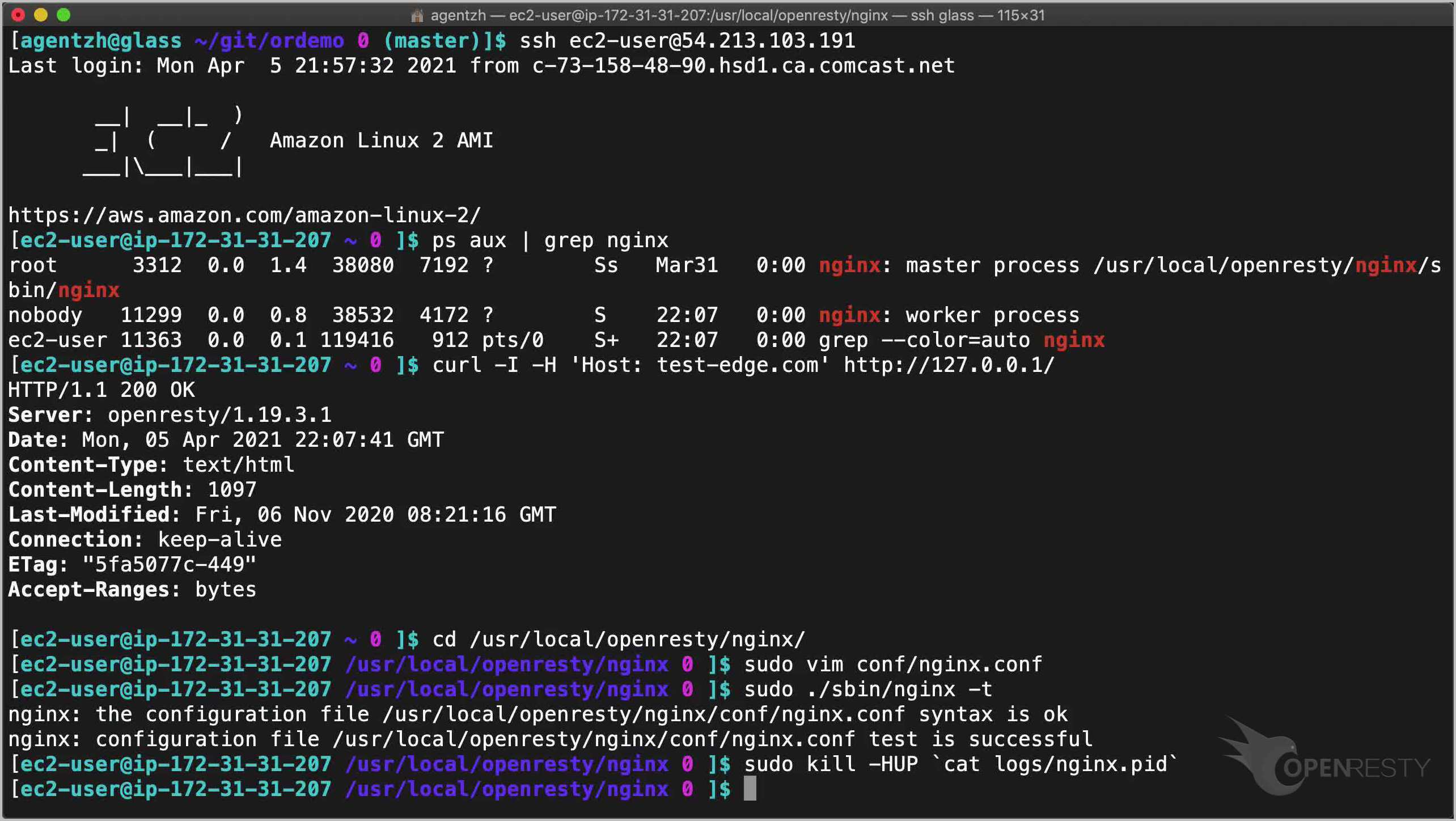

We can log in to the backend server.

ssh ec2-user@54.213.103.191

Recall that the backend server’s IP address ends with 191.

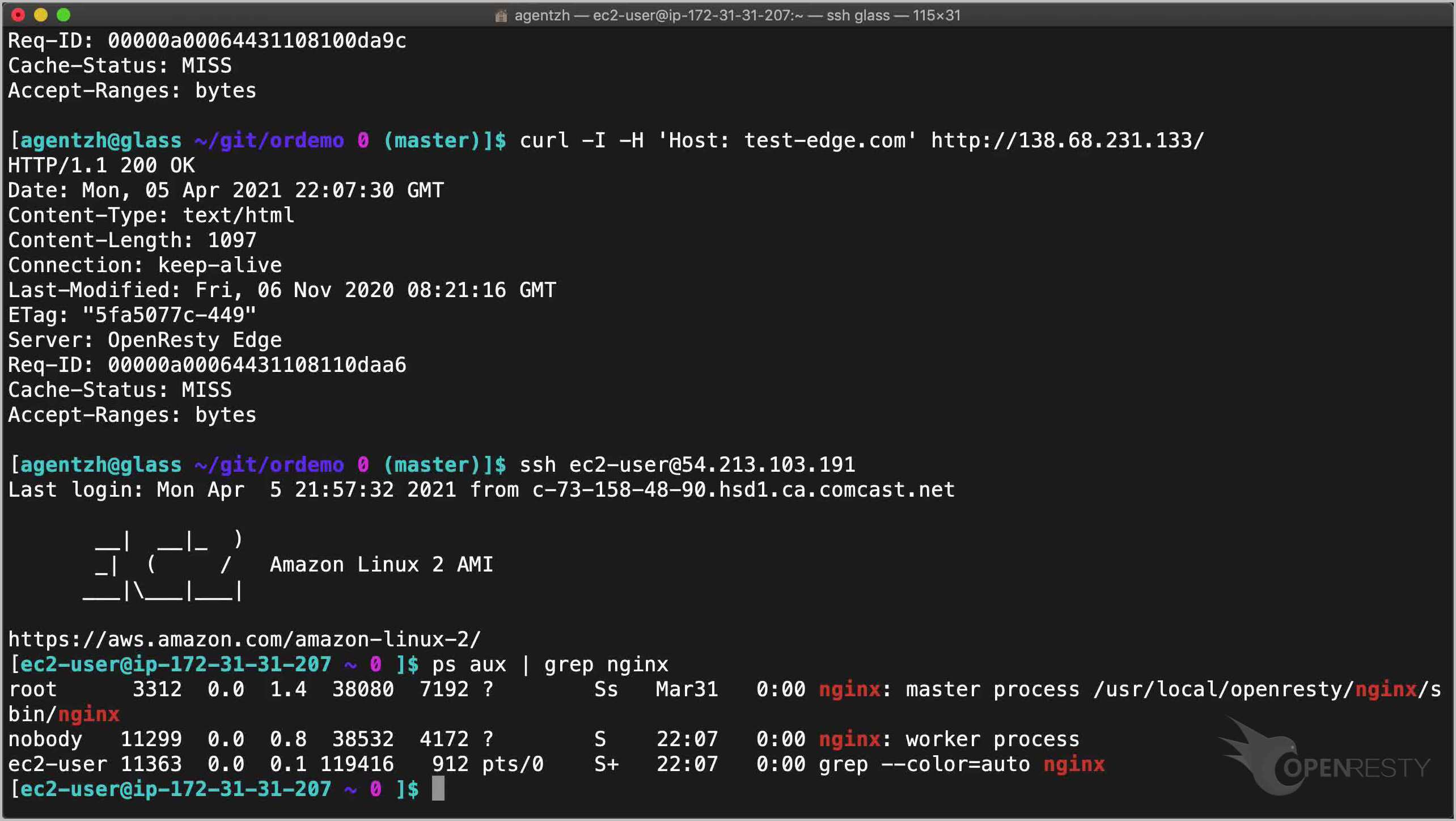

This backend server runs the open source OpenResty software.

ps aux | grep nginx

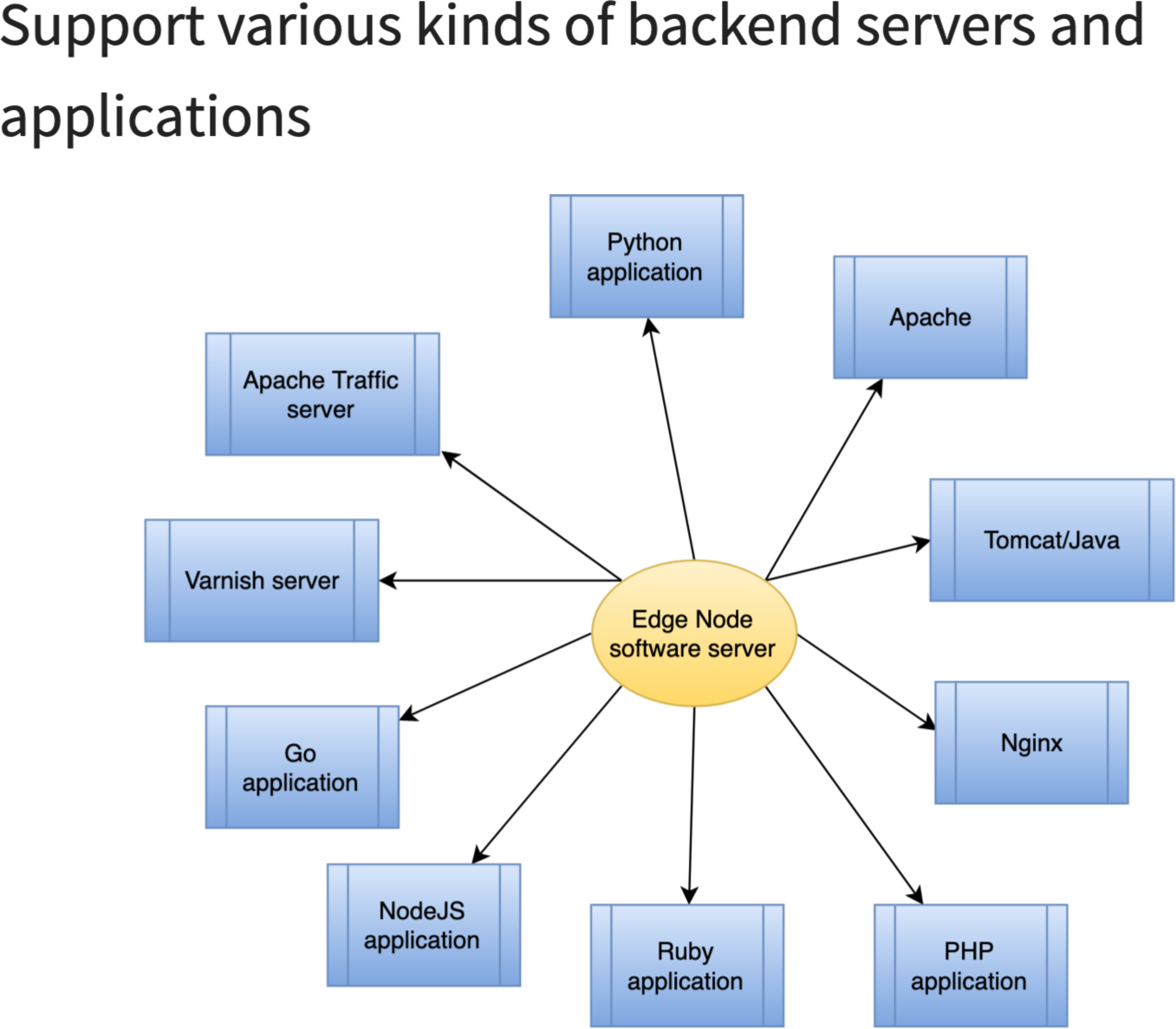

The backend server may run any other software that speaks HTTP.

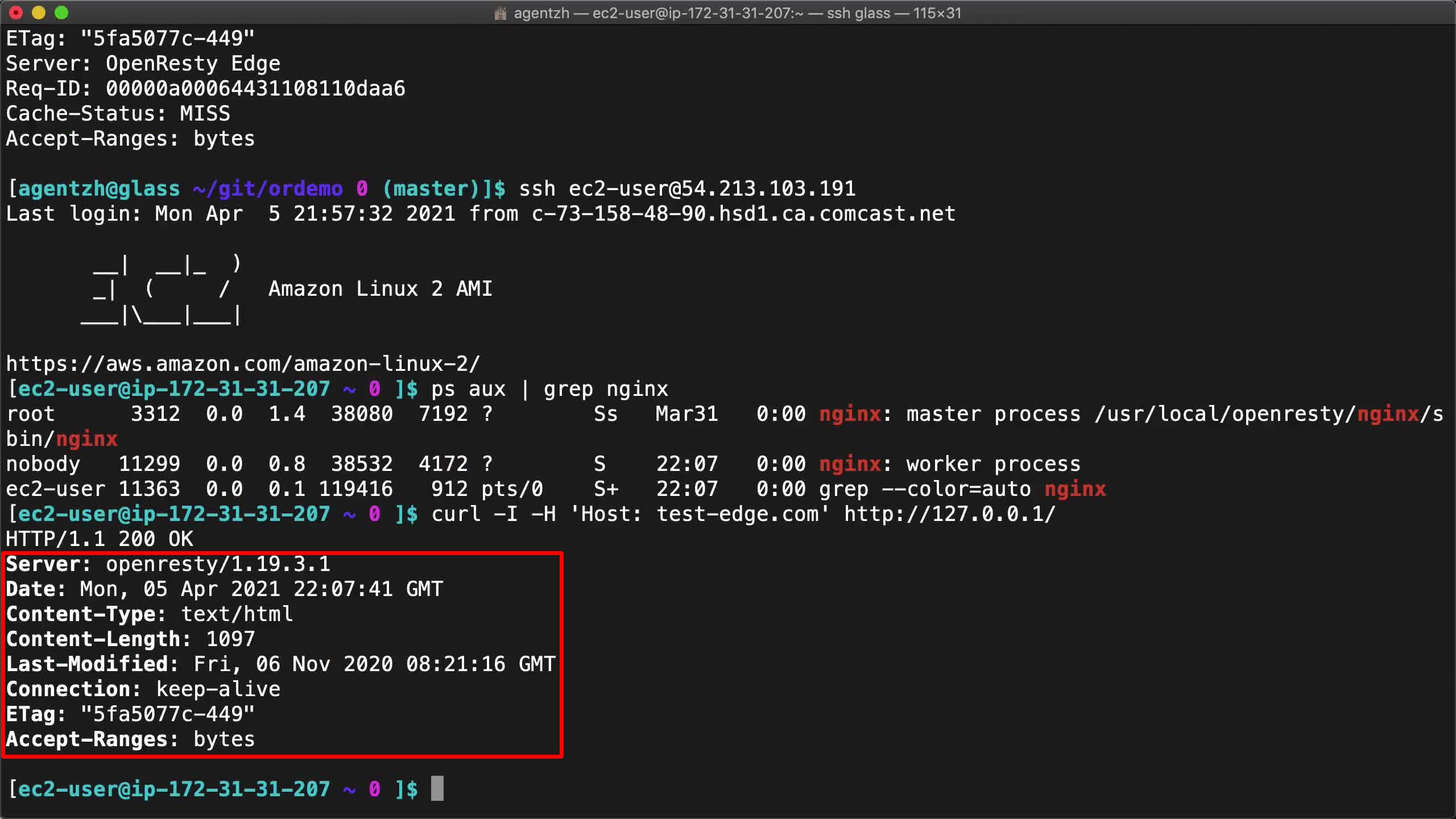

We can send a test request to this backend server directly.

curl -I -H 'Host: test-edge.com' http://127.0.0.1/

Note that we are accessing the local host.

Okay, it indeed does not provide any Expires or Cache-Control headers.
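The same check can be scripted. Below is a minimal sketch with a hypothetical has_cache_headers helper that reports whether raw response headers on standard input contain an Expires or Cache-Control header.

```shell
# Hypothetical helper: succeed (exit status 0) if the raw response headers on
# standard input contain an Expires or Cache-Control header.
has_cache_headers() {
    tr -d '\r' | grep -qiE '^(expires|cache-control):'
}

# Demonstration on canned headers, so no network access is needed:
if printf 'HTTP/1.1 200 OK\r\nContent-Type: text/html\r\n\r\n' | has_cache_headers; then
    echo "caching headers present"
else
    echo "no caching headers"
fi

# On the backend server from this tutorial, the input could come from curl:
#   curl -sI -H 'Host: test-edge.com' http://127.0.0.1/ | has_cache_headers
```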

Now let’s re-configure our backend server.

cd /usr/local/openresty/nginx/

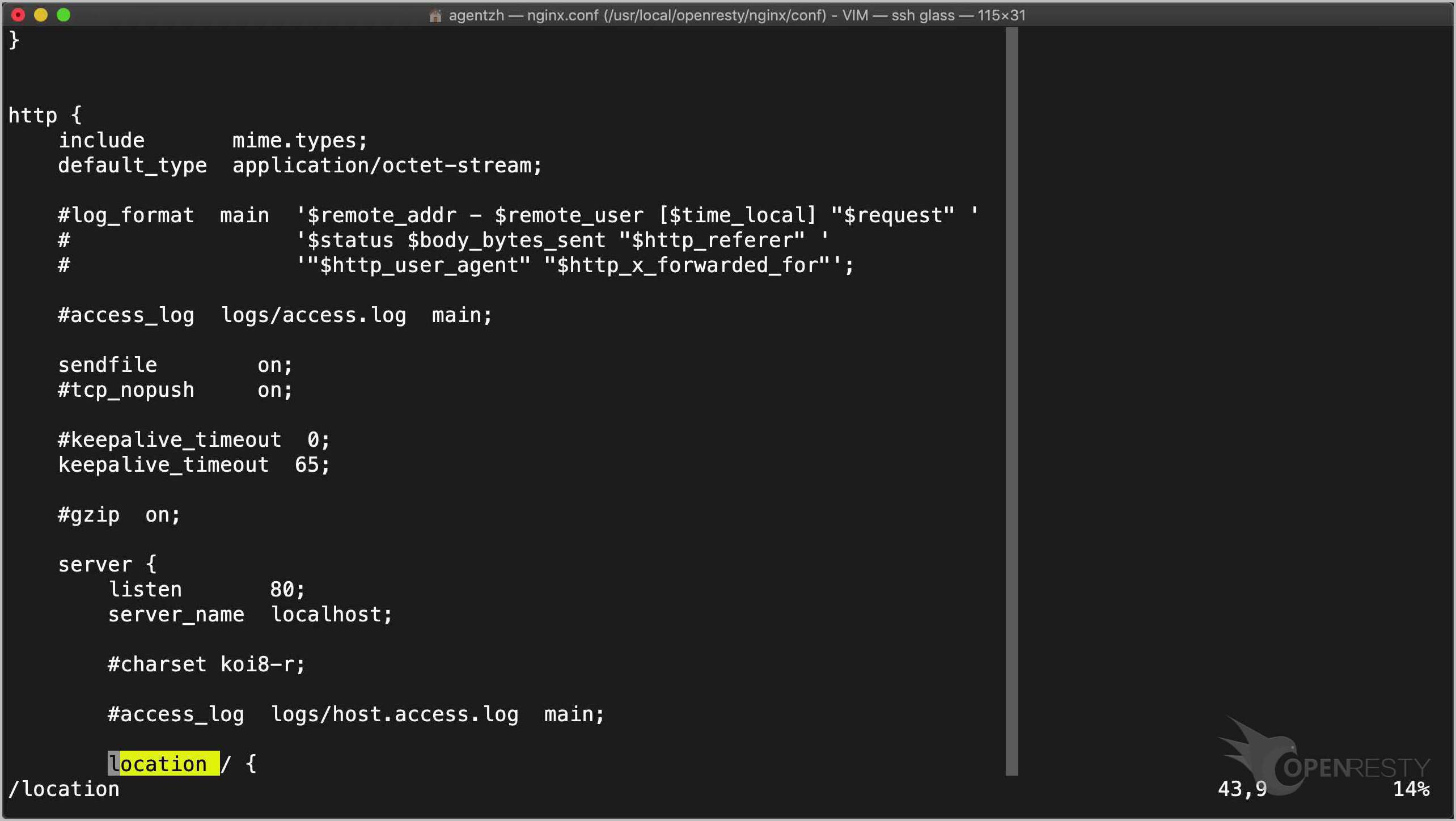

Open the nginx configuration file.

sudo vim conf/nginx.conf

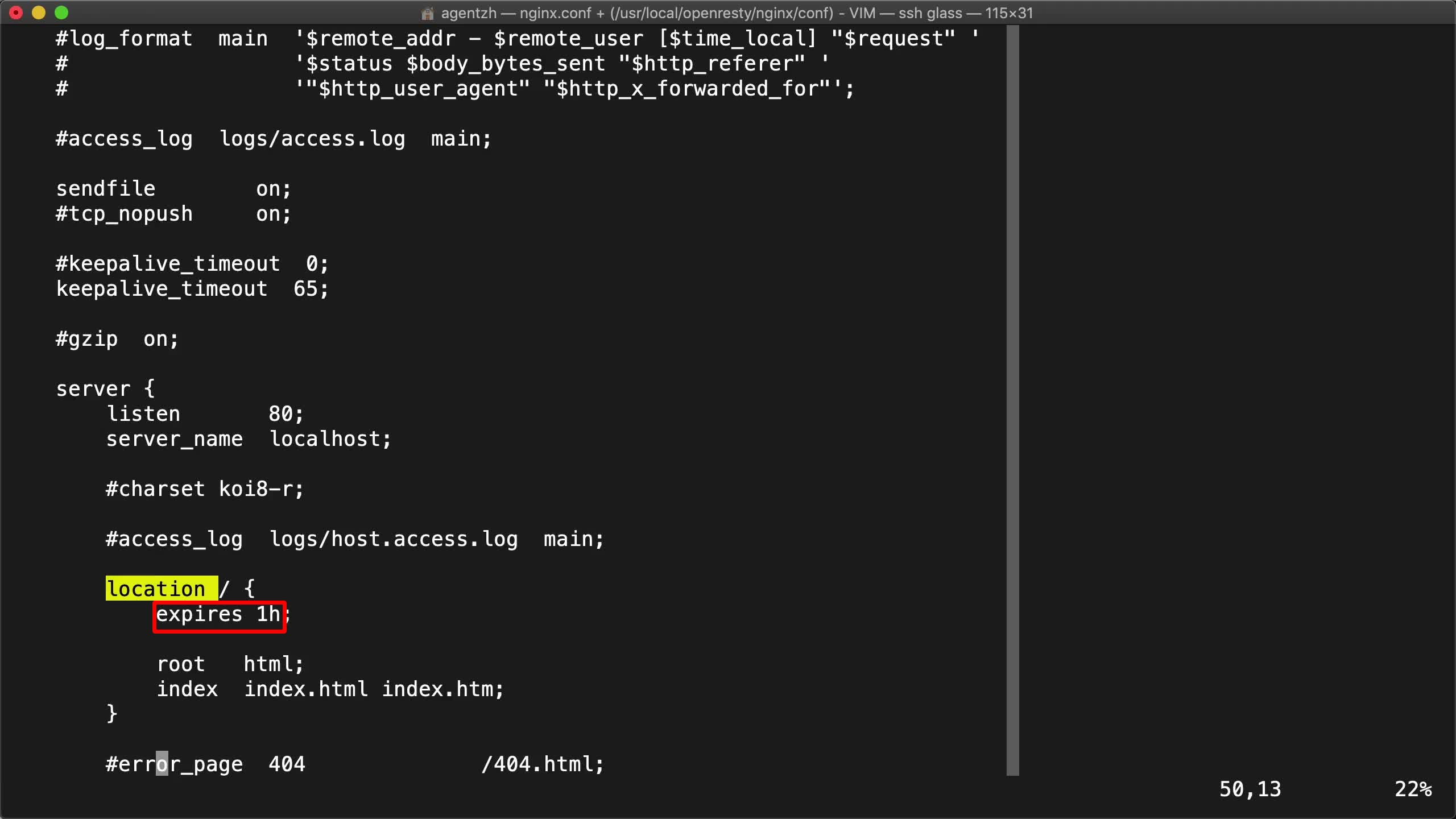

Find our root location, location /.

And add an expiration time of 1 hour.

expires 1h;

Note that the backend server can define different expiration times for different locations, or disable caching completely for certain responses.

Save and quit the file.

Test if the nginx configuration file is correct.

sudo ./sbin/nginx -t

Good.

Now reload the server.

sudo kill -HUP "$(cat logs/nginx.pid)"

Note that the configuration is the same for open source Nginx servers.

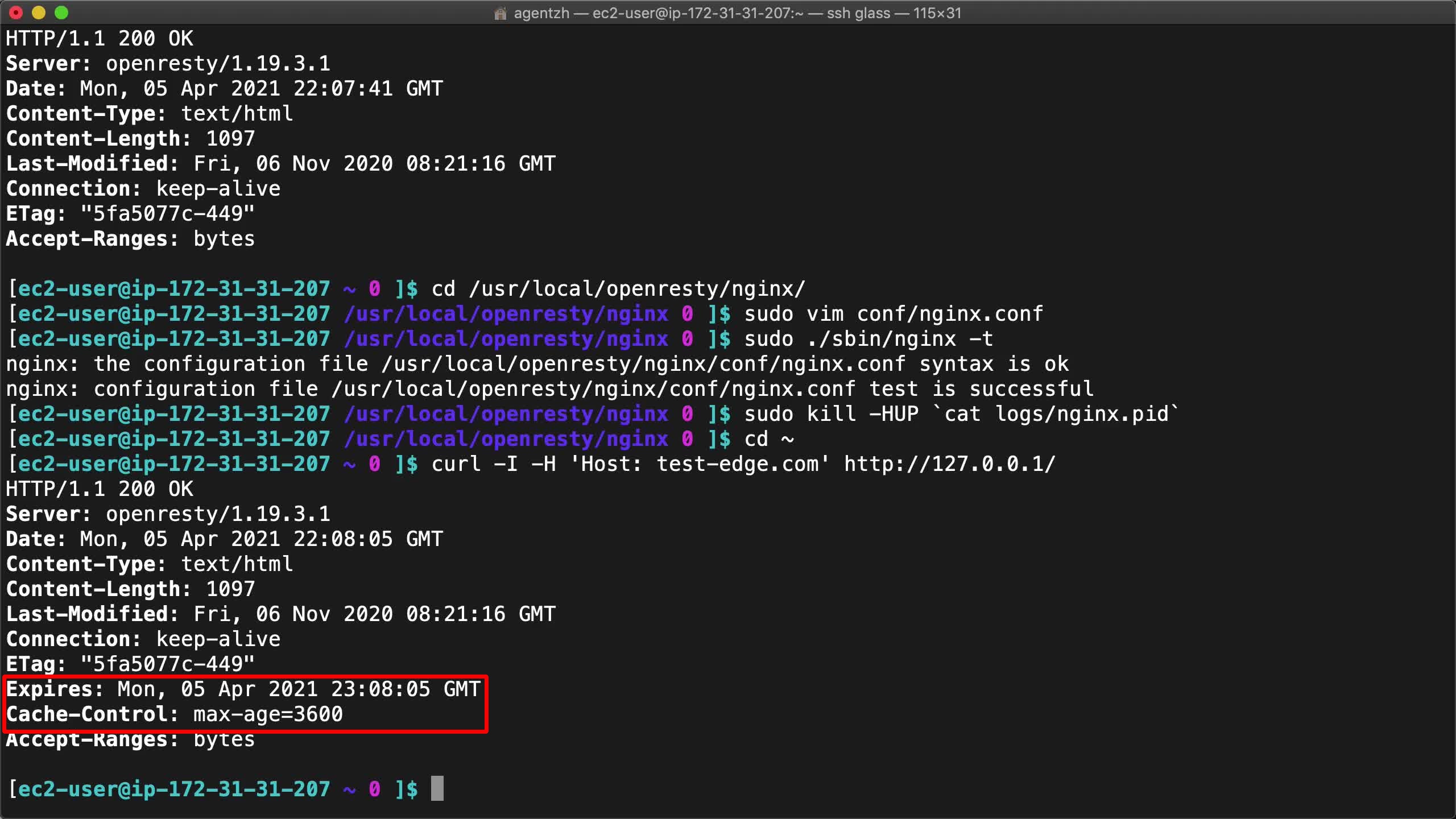

Time to test the backend server again.

curl -I -H 'Host: test-edge.com' http://127.0.0.1/

Yay! It responds with the Expires and Cache-Control headers now.
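For reference, nginx's expires directive with a positive time emits an Expires header set to the current time plus that interval, and a Cache-Control header whose max-age is the same interval in seconds. The sketch below only does the unit arithmetic. The to_seconds name is a hypothetical helper that handles a single s, m, h, or d suffix, not the full nginx time syntax.

```shell
# Hypothetical helper: convert a simple nginx-style time value
# (for example 30s, 5m, 1h, 2d, or a bare number of seconds) to seconds.
to_seconds() {
    value=${1%[smhd]}      # numeric part with any unit suffix removed
    unit=${1#"$value"}     # the unit suffix, or empty if none was given
    case $unit in
        ''|s) echo "$value" ;;
        m)    echo $((value * 60)) ;;
        h)    echo $((value * 3600)) ;;
        d)    echo $((value * 86400)) ;;
    esac
}

# The Cache-Control header we would expect for "expires 1h;":
echo "Cache-Control: max-age=$(to_seconds 1h)"
```

For the one-hour setting used here, this prints Cache-Control: max-age=3600.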

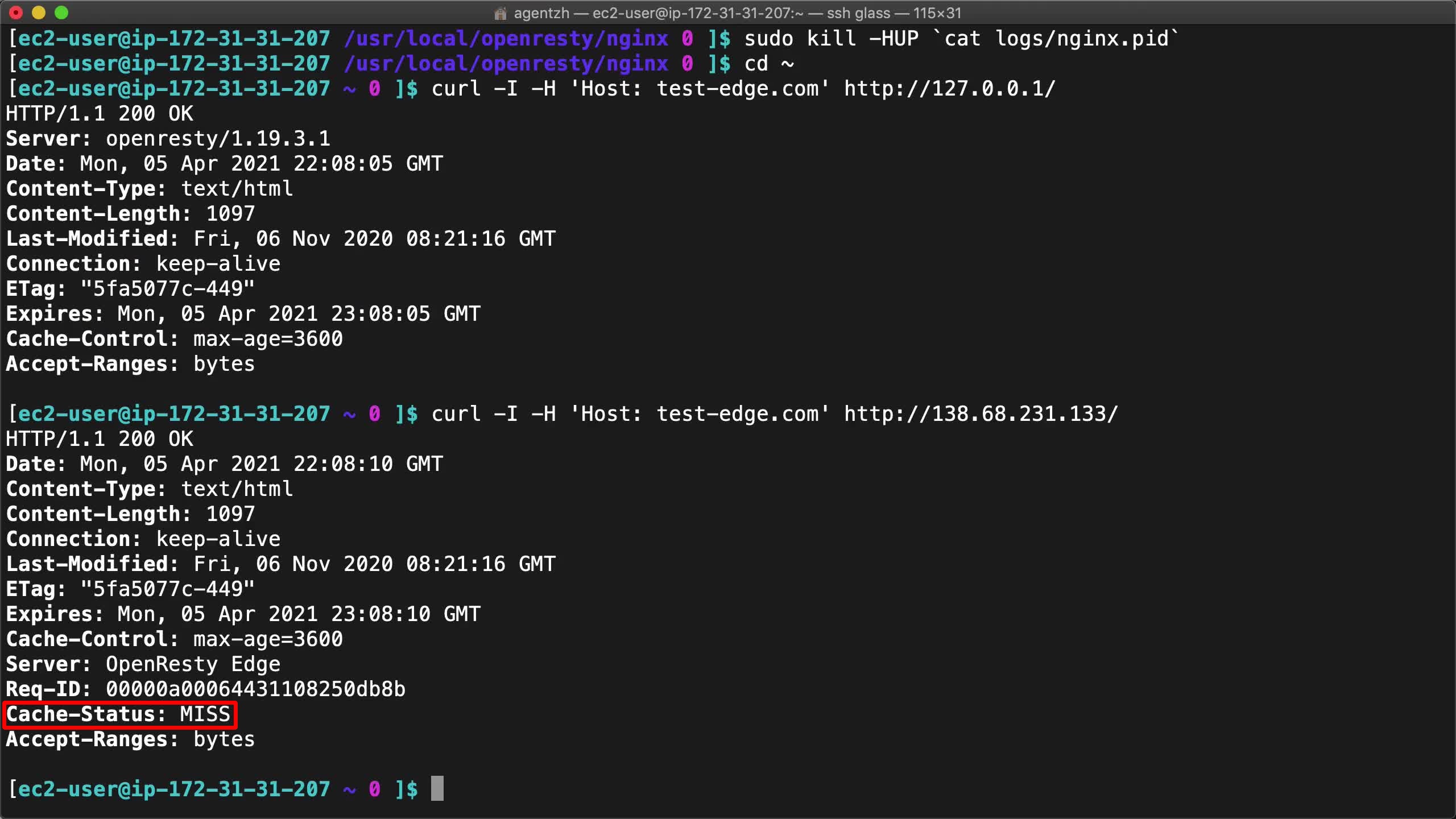

Send the request to the gateway server instead.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

It still shows the Cache-Status: MISS header.

This cache miss is expected because it is our very first request since the backend server started sending the caching headers.

Send the request again.

curl -I -H 'Host: test-edge.com' http://138.68.231.133/

Great! We finally see the Cache-Status: HIT header!

So it is now a cache hit as expected.

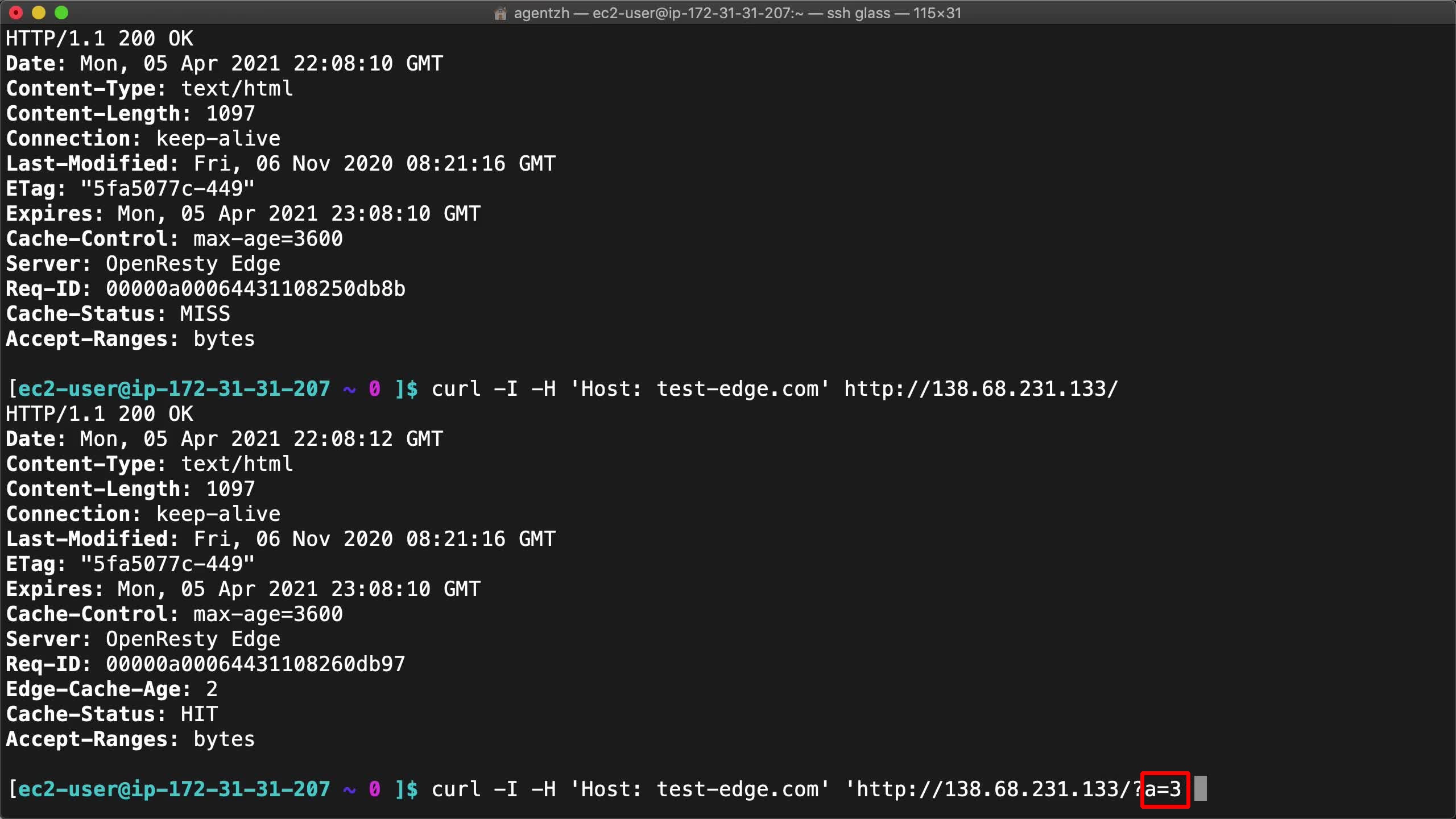

If we add a query string, we get a cache miss again.

curl -I -H 'Host: test-edge.com' 'http://138.68.231.133/?a=3'

Note the a=3 part.

This is because the default cache key includes the query string.

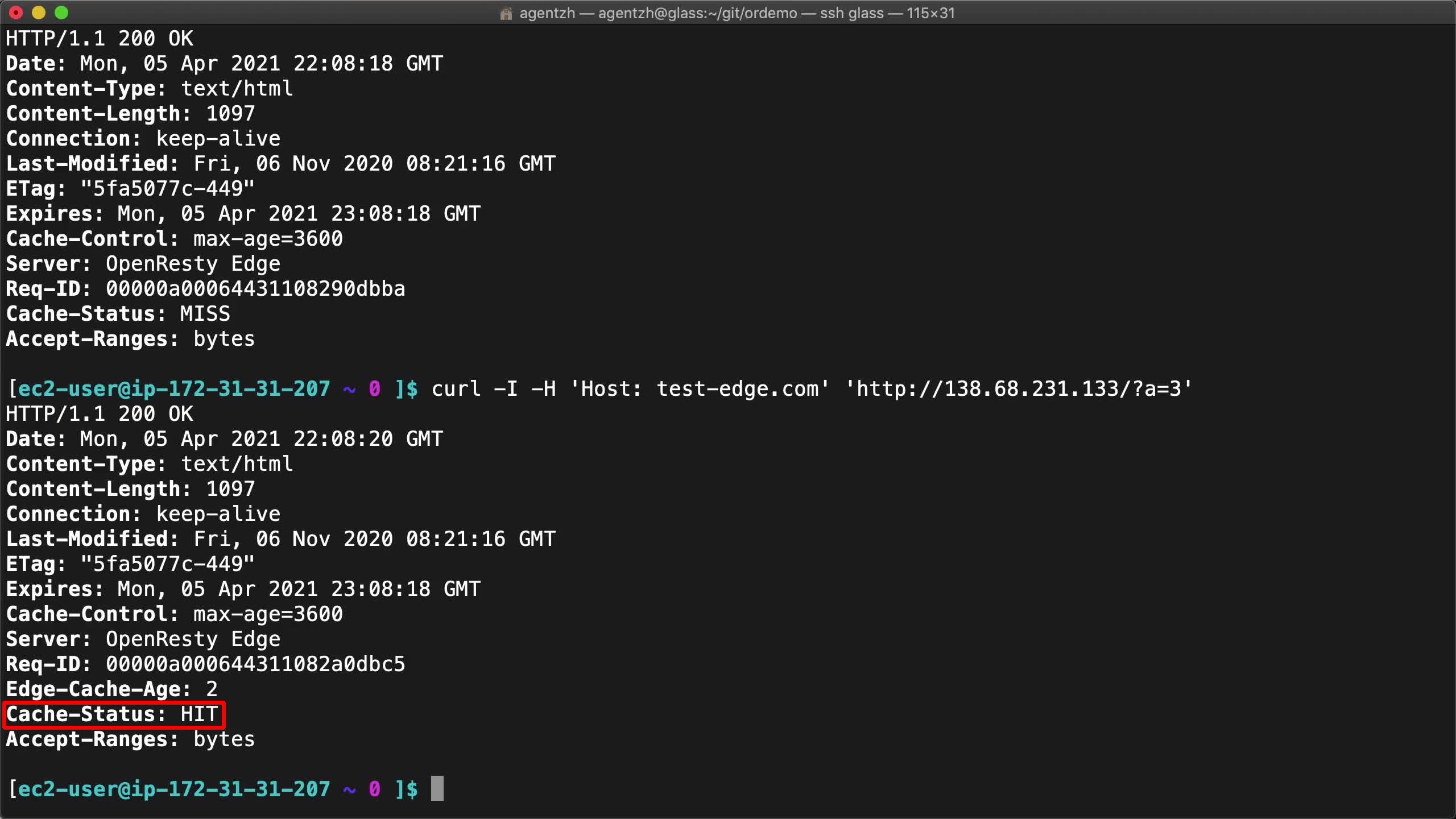

Running the same request again should result in a cache hit.

curl -I -H 'Host: test-edge.com' 'http://138.68.231.133/?a=3'

It’s indeed a cache hit now. If you don’t care about the query string, you can remove it from the cache key.
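The miss-then-hit pattern seen in these tests can be reproduced with a toy model. A cache is a lookup table indexed by the cache key, so the first request for a key misses and populates the table, and later requests for the same key hit. The sketch below is illustrative only. The lookup function and the temporary index file are hypothetical and say nothing about how OpenResty Edge actually stores cached responses.

```shell
# Toy cache index: one cache key per line in a temporary file.
cache_index=$(mktemp)

# Print HIT if the key is already in the index; otherwise store it and print MISS.
lookup() {
    # -x matches whole lines only, -F treats the key as a literal string.
    if grep -qxF -- "$1" "$cache_index"; then
        echo HIT
    else
        printf '%s\n' "$1" >> "$cache_index"
        echo MISS
    fi
}

lookup '/'        # prints MISS: the first request stores the key
lookup '/'        # prints HIT
lookup '/?a=3'    # prints MISS: the query string makes it a different key
lookup '/?a=3'    # prints HIT
```

If the query string were dropped from the key, the third call would look up the same key as the first two and hit right away.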

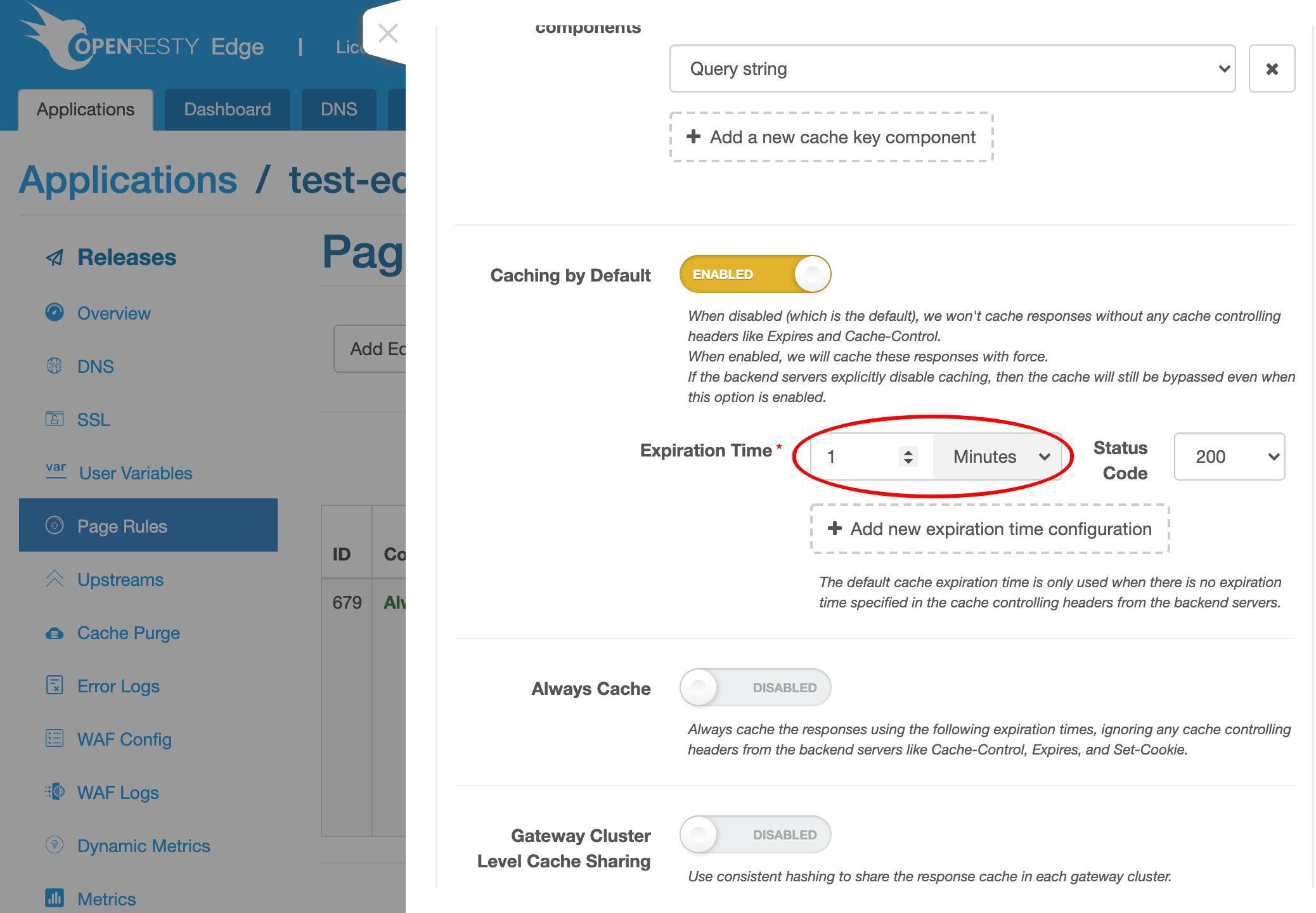

Sometimes we are just too lazy to change the backend server’s configuration. In that case, the gateway can enforce caching for responses that lack any cache control headers.

We can change our original page rule to enable this feature.

We enforce caching by default.

We can set the default expiration time for cacheable response status codes.

We’ll demonstrate this feature in another video.

What is OpenResty Edge

OpenResty Edge is our all-in-one gateway software for microservices and distributed traffic architectures. It combines traffic management, private CDN construction, API gateway, security, and more to help you easily build, manage, and protect modern applications. OpenResty Edge delivers industry-leading performance and scalability to meet the demanding needs of high-concurrency, high-load scenarios. It supports scheduling traffic for containerized applications, such as those running on K8s, and manages a massive number of domains, making it easy to meet the needs of large websites and complex applications.

If you like this tutorial, please subscribe to this blog site and/or our YouTube channel. Thank you!

About The Author

Yichun Zhang (GitHub handle: agentzh) is the original creator of the OpenResty® open-source project and the CEO of OpenResty Inc.

Yichun is one of the earliest advocates and leaders of “open-source technology”. He has worked at many internationally renowned tech companies, such as Cloudflare and Yahoo!. He is a pioneer of “edge computing”, “dynamic tracing” and “machine coding”, with over 22 years of programming and 16 years of open source experience. Yichun is well-known in the open-source space as the project leader of OpenResty®, adopted by more than 40 million global website domains.

OpenResty Inc., the enterprise software start-up founded by Yichun in 2017, has customers from some of the biggest companies in the world. Its flagship product, OpenResty XRay, is a non-invasive profiling and troubleshooting tool that significantly enhances and utilizes dynamic tracing technology. And its OpenResty Edge product is a powerful distributed traffic management and private CDN software product.

As an avid open-source contributor, Yichun has contributed more than a million lines of code to numerous open-source projects, including the Linux kernel, Nginx, LuaJIT, GDB, SystemTap, LLVM, and Perl. He has also authored more than 60 open-source software libraries.