The Wonderland of Dynamic Tracing (Part 3 of 3)

This is Part 3, the last part of the series “The Wonderland of Dynamic Tracing”, which consists of 3 parts in total. I will keep updating this series to reflect the state of the art of the dynamic tracing world.

The previous part, Part 2, explained Flame Graphs, a powerful visualization method, in great detail and introduced the common methodologies used when troubleshooting with dynamic tracing. This part looks at the Linux kernel support needed by dynamic tracing and at some more exotic tracing requirements, such as tracing hardware and the “corpses” of dead processes. It then covers traditional debugging technologies and the modern dynamic tracing world in general.

Dynamic Tracing Support in Linux Kernels

As I said earlier, dynamic tracing is generally based on the operating system kernel. The Linux kernel is now widely used, but its support for dynamic tracing has had a long and difficult history. One possible reason is that Linus Torvalds, the creator of Linux, used to consider dynamic tracing unnecessary.

At first, engineers at Red Hat prepared the so-called utrace patch for the Linux kernel to support user-mode dynamic tracing. This was the basis on which frameworks like SystemTap were originally built. For a long time thereafter, the utrace patch was included by default in the Linux distributions of the Red Hat family, such as RHEL, CentOS, and Fedora. During that period, SystemTap made sense only on operating systems of the Red Hat family. In the end, however, the mainline Linux kernel never incorporated the patch, and a compromise took its place.

The kprobes mechanism, introduced into the Linux mainline long ago, can dynamically insert probes at the entry, the exit, and other addresses of a designated kernel function, and lets the user define custom probe handler routines.

User-mode dynamic tracing support finally arrived after endless discussions and repeated revisions. Version 3.5 of the official Linux kernel introduced the inode-based uprobes mechanism, which can safely place dynamic probes at the entry and other addresses of a user-mode function and run custom probe handler routines. Later, version 3.10 added the uretprobes mechanism[1], which can additionally place dynamic probes at the return address of a user-mode function. Together, uprobes and uretprobes eventually replaced the main functions of the utrace patch, whose mission ended there. Newer versions of SystemTap can also automatically use the uprobes and uretprobes mechanisms in the kernel and no longer rely on the utrace patch.
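To make the uprobes and uretprobes mechanisms a bit more concrete, here is a minimal sketch of how probe definitions can be registered through the kernel's tracefs interface. It assumes tracefs is mounted at `/sys/kernel/tracing` (older systems use `/sys/kernel/debug/tracing`), and the binary path, offset, group, and event names are purely hypothetical. Tools like SystemTap do not necessarily go through this file interface; this only illustrates the entry probe (`p`) versus return probe (`r`) distinction.

```python
# Assumed tracefs location; older systems mount it under /sys/kernel/debug/tracing.
UPROBE_EVENTS = "/sys/kernel/tracing/uprobe_events"


def uprobe_spec(binary, offset, name, group="demo", is_return=False):
    """Build one definition line in the form `p|r:GROUP/EVENT PATH:OFFSET`.

    'p' places a uprobe at the given file offset; 'r' places a uretprobe
    that fires when the function at that offset returns.
    """
    kind = "r" if is_return else "p"
    return f"{kind}:{group}/{name} {binary}:{offset:#x}"


def register(spec, path=UPROBE_EVENTS):
    """Append a probe definition; needs root and a kernel built with uprobes."""
    with open(path, "a") as f:
        f.write(spec + "\n")


if __name__ == "__main__":
    # Hypothetical offset of some function inside /bin/bash.
    print(uprobe_spec("/bin/bash", 0x4245C0, "func_entry"))
    print(uprobe_spec("/bin/bash", 0x4245C0, "func_return", is_return=True))
```

The offset is a file offset inside the binary rather than a virtual address, which is what the "inode-based" design implies: the probe is attached to the file, and every process mapping that file sees it.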

In recent years, Linux mainline kernel developers have created eBPF as a more general-purpose kernel virtual machine. eBPF was extended from the Berkeley Packet Filter (BPF), the dynamic compiler previously used for network packet filtering, including by Netfilter for firewall functions. With eBPF, developers can construct a dynamic tracing virtual machine resident in the Linux kernel, similar to DTrace. In fact, some efforts in this direction have already surfaced. One example is the BPF Compiler Collection (BCC), which invokes the LLVM toolchain to compile C code into bytecode that the eBPF virtual machine understands. In any case, dynamic tracing support in Linux keeps getting better. This is especially true since Linux kernel 3.15, as the kernel mechanisms related to dynamic tracing have become more robust and stable. Unfortunately, serious restrictions in the design of eBPF have long confined the dynamic tracing tools built on it to a relatively simple level, or what I call the “Stone Age” of such tools. Although SystemTap has recently started to support an eBPF runtime, the stap language features that runtime supports are extremely limited. Even Frank Ch. Eigler, the chief developer of SystemTap, has expressed his worries about that limitation.
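The BCC workflow mentioned above (C code compiled by LLVM into eBPF bytecode, then attached to a probe) looks roughly like the following. This is only a sketch. It assumes the BCC Python bindings are installed, that the script runs as root, and that the kernel has eBPF and kprobes enabled. The function name `on_clone` is a hypothetical name chosen for this example.

```python
# C source that BCC hands to LLVM to be compiled into eBPF bytecode.
PROG = r"""
int on_clone(void *ctx) {
    bpf_trace_printk("clone() called\n");
    return 0;
}
"""


def main():
    # Imported lazily so the module can be loaded on machines without BCC.
    from bcc import BPF

    b = BPF(text=PROG)  # compile the C text into eBPF bytecode
    # Resolve the kernel's symbol name for the clone syscall, then attach a kprobe.
    b.attach_kprobe(event=b.get_syscall_fnname("clone"), fn_name="on_clone")
    b.trace_print()  # stream the trace pipe output until interrupted


if __name__ == "__main__":
    main()
```

Even in this tiny example the tool is split into two languages, C for the in-kernel part and Python for the user-land part.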

Hardware Tracing

Since dynamic tracing can play a crucial role in the analysis of software systems, you must be wondering whether similar approaches and ideas are applicable to hardware tracing.

Operating systems interact with hardware directly, so tracing drivers or other parts of the operating system offers an indirect way to analyze certain behaviors and problems of the hardware devices attached to the system. Some features of modern hardware also make hardware tracing possible. Hardware such as Intel CPUs often has built-in performance statistics registers (hardware performance counters). Once software reads these special registers, a wealth of information directly related to the hardware becomes available. The perf tool in Linux was originally created for exactly this purpose. Even virtual machine software such as VMware emulates these special hardware registers. Debugging tools like Mozilla rr were also built on these registers to record process execution and replay it deterministically.

Directly inserting probes into hardware and performing dynamic tracing there may still be science fiction, but I’m looking forward to hearing from anyone willing to share their insights and information on it.

Analyzing the Remains of Dead Processes

So far, we have been talking about how to analyze live, running processes. But what about dead processes? When a process is dead, it usually means that it has crashed abnormally and produced a so-called core dump file. Though dead, such processes leave “remains” available for in-depth analysis, making it possible to find the cause of their death. In this sense, programmers are also tasked with “forensic” examinations.

The well-known GNU Debugger (GDB) is the classic tool for analyzing the remains of dead processes. In the LLVM world, its counterpart is called LLDB. One obvious weakness of GDB is that its native command language is very limited. If core dump files are analyzed manually, one command after another, the information obtained will be very limited as well. In practice, most engineers do not go much further than that. They check the current C call stack with the bt full command, view the current value of each CPU register with the info reg command, or inspect the machine code sequence at the crash location, and so on. But far more information is hidden deep inside all sorts of complicated binary data structures allocated on the heap. Scanning and analyzing those structures clearly requires automation, which calls for a programmable way to write complex core dump analysis tools.

In response to this need, newer versions of GDB (starting from version 7.0, if memory serves) have built-in support for Python scripting. Python can now be used to implement relatively complicated GDB commands, allowing in-depth analysis of core dumps and the like. I’ve also written a host of advanced GDB-based debugging tools in Python, many of which even have a one-to-one correspondence with the SystemTap tools that analyze live processes. As with dynamic tracing, these debugging tools help blaze a trail to light out of the dark “dead world”.

However, this approach places a significant burden on tool development and porting. Using the Python scripting language to traverse C-style data structures is not much fun, and writing such awkward Python code for long enough can drive one crazy. The burden is also evident in having to write the same tool twice, first in the SystemTap scripting language and then in GDB Python code. Both versions require meticulous development and testing. While the nature of the work is similar, the two versions are entirely different in implementation code and in the corresponding APIs. (It is worth mentioning that LLDB in the LLVM world has similar Python programming support, but its Python API is not compatible with that of GDB.)
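To give a flavor of what traversing a C-style data structure from GDB's Python looks like, here is a minimal sketch that walks a NULL-terminated singly linked list. The `list-len` command name and the default `next` field name are hypothetical and chosen only for this example. This is not one of the tools mentioned in this article. The `gdb` module is only importable inside a GDB process, so the import is guarded to let the traversal helper be tried outside GDB as well.

```python
try:
    import gdb  # only importable inside a GDB process
except ImportError:
    gdb = None  # allows walk_list() to be exercised outside GDB


def walk_list(head, next_field="next", limit=100000):
    """Yield each node of a NULL-terminated singly linked list.

    `head` is a pointer-like value; inside GDB it is a gdb.Value of
    pointer type. `limit` guards against cycles in a corrupted heap.
    """
    node, seen = head, 0
    while int(node) != 0:
        if seen >= limit:
            raise RuntimeError("list too long or cyclic; heap may be corrupted")
        struct = node.dereference()
        yield struct
        node = struct[next_field]
        seen += 1


if gdb is not None:
    class ListLen(gdb.Command):
        """list-len EXPR [FIELD]: count the nodes of a linked list."""

        def __init__(self):
            super().__init__("list-len", gdb.COMMAND_DATA)

        def invoke(self, arg, from_tty):
            argv = gdb.string_to_argv(arg)
            if not argv:
                raise gdb.GdbError("usage: list-len EXPR [FIELD]")
            head = gdb.parse_and_eval(argv[0])
            field = argv[1] if len(argv) > 1 else "next"
            gdb.write("%d nodes\n" % sum(1 for _ in walk_list(head, field)))

    ListLen()  # register the command with GDB
```

Assuming the script is saved as `list_len.py`, it could be loaded into a GDB session attached to a core dump with `source list_len.py` and then invoked as `list-len some_head_pointer`, where `some_head_pointer` stands for any expression that evaluates to a pointer to the first node. Even this toy case needs explicit dereferencing, field lookups by string name, and corruption guards, which hints at how tedious real heap scanners become.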

Of course, GDB can also be used to analyze live programs. But compared with SystemTap, GDB is significantly inferior in performance. When I compared the SystemTap version and the GDB Python version of one complicated tool, the difference in performance was an order of magnitude. GDB was never designed for this kind of online analysis. It was designed for interactive use. It is true that GDB can run in batch mode, but its internal implementation imposes serious restrictions on its performance. Among other things, nothing makes me angrier than the misuse of longjmp inside GDB for routine error handling, which results in grave performance losses, as can be clearly seen in the GDB flame graph generated by SystemTap. As for analyzing dead processes, the good news is that time is not a problem, since offline analysis is always an option. But even offline work can still be frustrating, given that some very complicated GDB Python tools need to run for a couple of minutes.

I once used SystemTap to analyze the performance of GDB + Python, and the flame graph revealed the two biggest execution hotspots inside GDB. I then proposed two C patches to GDB, one for Python string operations and the other [for how GDB handles errors](https://sourceware.org/ml/gdb-patches/2015-02/msg00114.html). With them, the most complicated GDB Python tools run about twice as fast overall. GDB has officially merged one of them. So you see, applying dynamic tracing to analyze and improve traditional debugging tools is also a lot of fun.

Part of my work was to write GDB Python debugging tools. Many of them are open source on GitHub, usually in repositories like nginx-gdb-utils, and primarily target Nginx and LuaJIT. With these tools, I helped LuaJIT author Mike Pall uncover more than 10 bugs inside LuaJIT. Most of them had stayed hidden in the JIT compiler for years.

Since dead processes no longer change with the passage of time, I think the corresponding analysis of core dumps can be tentatively called “static tracing”.

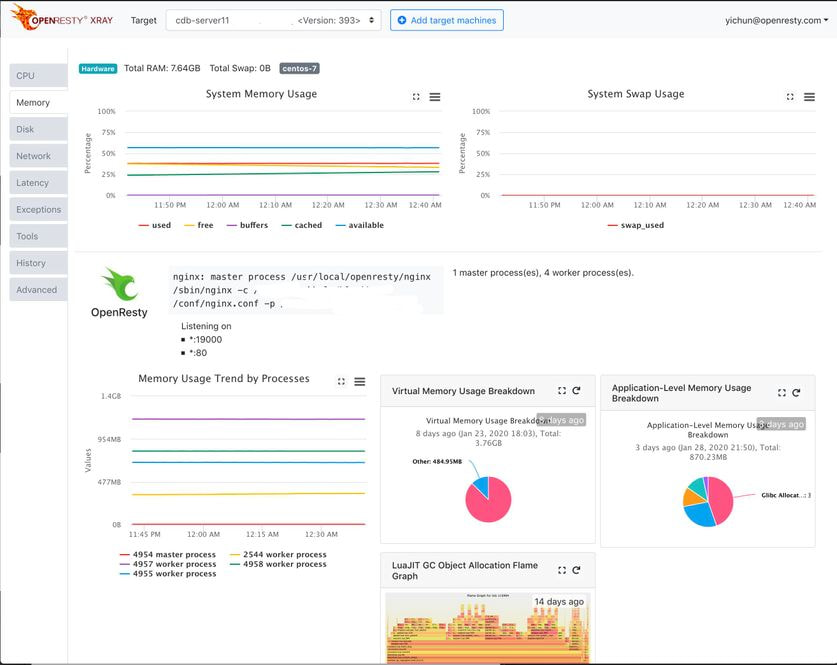

Thanks to its Y language compiler, OpenResty XRay lets analysis tools written in the Y language target the GDB platform as well, thus automating the in-depth analysis of core dump files.

Traditional Debugging Technologies

When it comes to GDB, I must mention the differences and connections between dynamic tracing and traditional debugging approaches. Experienced engineers, if they are careful enough, should have noticed that dynamic tracing has a “predecessor”: setting breakpoints in GDB and then running a series of inspections when they are hit. The difference is that dynamic tracing always pursues non-interactive batch processing and the lowest possible performance overhead. In contrast, GDB is designed for interactive operation and considers neither production safety nor performance overhead a priority, so it has a huge performance impact in most cases. Meanwhile, GDB is based on the very old ptrace system call, which has a great variety of pitfalls and problems. For example, ptrace needs to change the parent of the target process and forbids multiple debuggers from analyzing the same process simultaneously. In a certain sense, what GDB offers can be seen as a “low-level” form of dynamic tracing.

I see many programming beginners performing “single-step execution” with GDB. This, however, is often a very inefficient approach in real-world industrial development and production. The reason is that single-stepping often changes the timing of program execution, so many timing-related problems can no longer be reproduced. In addition, when software systems are complicated, whoever is single-stepping tends to get stuck in individual code paths and lose sight of the whole picture.

Given those factors, my suggestion for debugging in daily development is to take the seemingly stupidest yet simplest approach: put print statements on key code paths. Reading the resulting output, such as logs, gives a complete context in which the results of program execution can be analyzed well. This approach proves particularly efficient when combined with test-driven development. As the previous discussions have shown, however, adding logging and event tracking code is impractical for online debugging. Also, traditional performance analysis tools, including DProf in Perl, gprof in the C world, and performance profilers for other languages and environments, often require recompiling programs with special options or rerunning them in a special way. Such tools, which require special handling and cooperation, are obviously not suitable for online, real-time analysis of live systems.
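The print-statement approach recommended above for daily development can be as simple as the following sketch, which uses Python's standard logging module. The function and logger names are hypothetical and serve only as illustration.

```python
import logging

log = logging.getLogger("orders")  # hypothetical logger name


def apply_discount(total_cents, percent):
    """Toy function with log statements on its key code paths."""
    log.debug("apply_discount: total=%d percent=%d", total_cents, percent)
    if not 0 <= percent <= 100:
        # Unusual branch: record the bad input before bailing out.
        log.warning("rejecting out-of-range percent=%d", percent)
        return total_cents
    result = total_cents - total_cents * percent // 100
    log.debug("apply_discount: result=%d", result)
    return result


if __name__ == "__main__":
    logging.basicConfig(
        level=logging.DEBUG,
        format="%(asctime)s %(levelname)s %(message)s",
    )
    apply_discount(2000, 15)
    apply_discount(2000, 150)
```

Reading the log lines in order reconstructs the inputs, the branch taken, and the result of each call, which is the “complete context” that single-stepping tends to obscure.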

A Messy World of Debugging

Today’s debugging world is a real mess, flooded with DTrace, SystemTap, eBPF/BCC, GDB, LLDB, and many other things not mentioned here. Relevant information can be found online. Their sheer number may just be a reflection of the disorder of the real world we live in.

An idea that lingered in my mind for years was to design and create a universal debugging language. My work at OpenResty Inc. has made that dream a reality. It’s called the Y language, and its compiler can automatically generate input code acceptable to various debugging frameworks and technologies. These include D language code for DTrace, stap scripts for SystemTap, Python scripts for GDB, Python scripts for LLDB with its incompatible API, bytecode acceptable to eBPF, and even a mixture of C and Python code acceptable to BCC.

As I pointed out earlier, manually porting a debugging tool to different debugging frameworks is a lot of work. With the Y language, the compiler can automatically convert the same Y code into input code tailored for the various debugging platforms and optimize it for each of them. We only need to write each debugging tool in Y once, which is a huge relief. And those doing the debugging are freed from the trouble of studying all the fragmented details of each debugging technology and from the danger of falling into the “traps” in each of them.

The Y language is included in OpenResty XRay and has been available to its users.

Some may wonder where the name Y originates. From a personal perspective, Y is the first letter of my name, Yichun. Of course, the more important implication lies in the nature of the language. Y language is created to answer questions starting with “why”, which has the same pronunciation as Y.

OpenResty XRay

As a commercial product of OpenResty Inc., OpenResty XRay helps users dig deep into the specific behaviors of all kinds of software systems, whether online or offline, and accurately analyze and identify various problems in performance, reliability, and security, without needing any cooperation from the target program. Its tracing capabilities are more powerful than those of SystemTap, and its performance and ease of use far surpass the latter. It also supports automatic analysis of program remains such as core dump files.

Conclusion

This part of the series looked at the Linux kernel support needed by dynamic tracing and at some more exotic tracing needs, such as tracing hardware and the “corpses” of dead processes. It also covered traditional debugging technologies and the modern dynamic tracing world in general.

A Word on OpenResty XRay

OpenResty XRay is a commercial dynamic tracing product offered by our company, OpenResty Inc. We use this product in articles like this one to demonstrate implementation details and to provide statistics about real-world applications and open-source software. In general, OpenResty XRay can help users gain deep insight into their online and offline software systems without any modifications or any other cooperation, and efficiently troubleshoot difficult problems in performance, reliability, and security. It utilizes advanced dynamic tracing technologies developed by OpenResty Inc. and others.

We welcome you to contact us to try out this product for free.

About The Author

Yichun Zhang (GitHub handle: agentzh) is the original creator of the OpenResty® open-source project and the CEO of OpenResty Inc.

Yichun is one of the earliest advocates and leaders of “open-source technology”. He has worked at many internationally renowned tech companies, such as Cloudflare and Yahoo!. He is a pioneer of “edge computing”, “dynamic tracing”, and “machine coding”, with over 22 years of programming and 16 years of open-source experience. Yichun is well known in the open-source space as the project leader of OpenResty®, which has been adopted by more than 40 million website domains worldwide.

OpenResty Inc., the enterprise software start-up founded by Yichun in 2017, has customers from some of the biggest companies in the world. Its flagship product, OpenResty XRay, is a non-invasive profiling and troubleshooting tool that significantly enhances and utilizes dynamic tracing technology. And its OpenResty Edge product is a powerful distributed traffic management and private CDN software product.

As an avid open-source contributor, Yichun has contributed more than a million lines of code to numerous open-source projects, including the Linux kernel, Nginx, LuaJIT, GDB, SystemTap, LLVM, and Perl. He has also authored more than 60 open-source software libraries.

Translations

We provide a Chinese translation of this article on blog.openresty.com. We also welcome interested readers to contribute translations in other languages, as long as the full article is translated without any omissions. We thank in advance anyone willing to do so.

[1] uretprobes has big problems in its implementation because it directly modifies the system runtime stack, thus breaking a lot of important features like stack unwinding. Our OpenResty XRay implements a new uretprobes mechanism from scratch without all those shortcomings.